Data engineering for the data lakehouse: Four guiding principles

A rising number of enterprises are adopting the lakehouse to unite their analytics projects and foster innovation on a shared, cloud-based platform.

A rising number of enterprises are adopting the lakehouse to unite their analytics projects and foster innovation on a shared, cloud-based platform. As with any data platform, achieving this goal requires rigorous data engineering to meet fast-changing business demands.

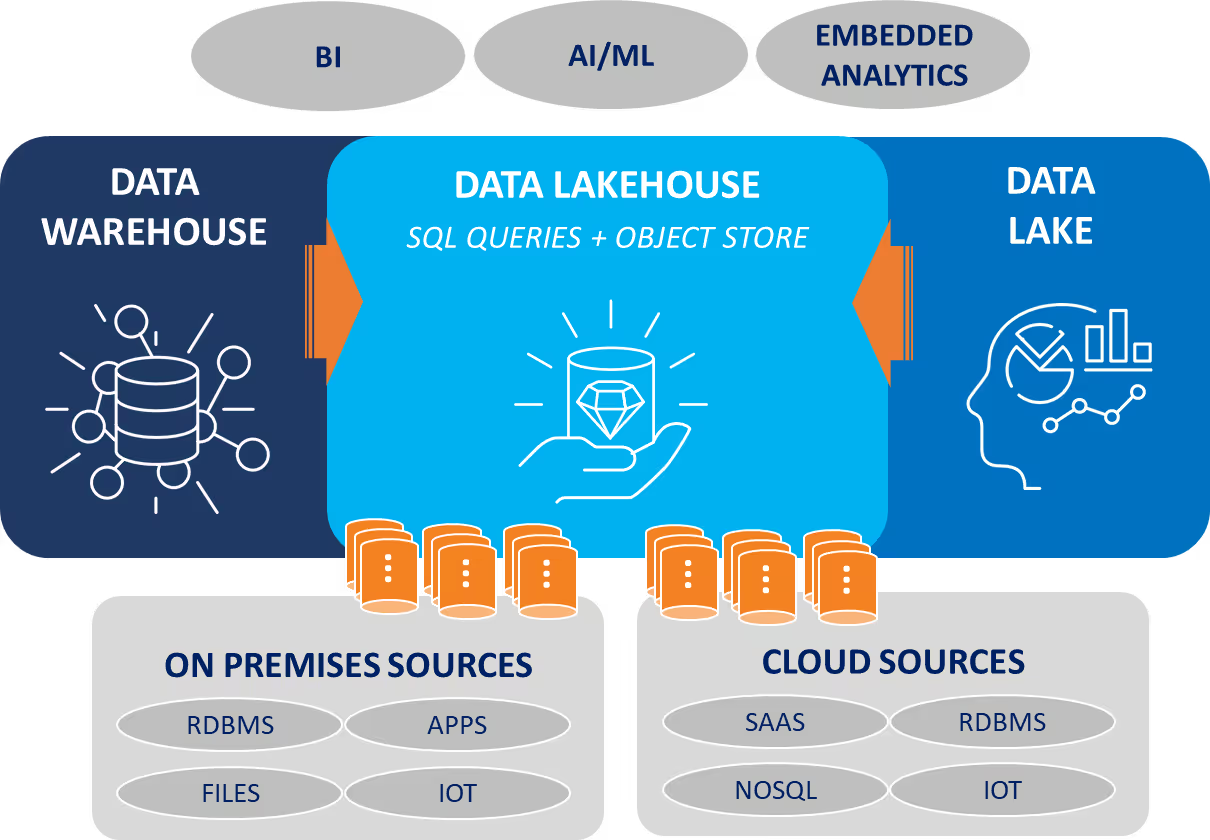

The lakehouse is an emerging paradigm that combines the best elements of both the data warehouse and data lake. Like a data warehouse, the lakehouse helps query, transform, and govern data. Like a data lake, it stores multi-structured data objects and integrates with a rich ecosystem of data science tools. By uniting these elements on elastic cloud infrastructure with Apache Spark as its underlying engine, the lakehouse can support business intelligence (BI), analytics, artificial intelligence and machine learning (AI/ML) workloads across all clouds. Most data platform vendors embraced this notion of convergence after Databricks coined the “lakehouse” term in 2020, although their terminology can vary (Snowflake likes to talk about its Data Cloud).

Still early in the adoption cycle, the lakehouse creates both opportunity and challenge for the modern enterprise. The opportunity is to support more analytics projects with one platform, increasing efficiency and innovation. The challenge is to integrate diverse elements and manipulate diverse datasets without bogging down in complexity. And that challenge falls on data engineering teams. Data engineers must ingest and transform data from sources such as databases, applications, IoT sensors, unstructured files, and social media streams. They must manage that data within the lakehouse to feed a growing number of devices, applications, tools, and algorithms. Even expert data engineers can struggle to build and manage reliable, high-performance data pipelines at scale across these complex elements.

This blog recommends four guiding principles for effective data engineering in a lakehouse environment. The principles are to (1) automate processes, (2) adopt DataOps, (3) embrace extensibility, and (4) consolidate tools. Let’s explore each in turn, using the diagram below as reference.

The Modern Data Lakehouse Environment

Automate.

To manage a mountain of work, data engineers need automated pipeline tools that reduce repetitive and error-prone work. These tools provide a graphical interface that hides the complexity of configuring, deploying, monitoring, adapting, and optimizing pipelines. The more data engineers can use and re-use standard pipeline artifacts with a mouse rather than a keyboard, the more productive they become. This frees up time for them to design custom pipelines, for example to handle complex transformation jobs that filter, merge, and reformat tables for real-time analytics.

Automation also enables enterprises to democratize data access, preparation, and consumption. Armed with automated tools, data scientists or business-oriented analysts within business units can manage pipelines themselves. They can use their intuition and domain knowledge of the subject matter to create visual pipeline designs, perform basic transformations, and deliver that data to BI tools or ML models alike. They inspect the results, learn, and iterate, with little or no dependency on the expert data engineers within IT.

Adopt DataOps.

The discipline of DataOps helps data engineering teams optimize data pipelines by applying principles of DevOps, agile software development, and total quality management. DataOps comprises continuous integration and deployment (CI/CD), testing, data observability, and orchestration.

- CI/CD. Iterate both pipelines and datasets to maintain quality standards while relying on a single version of truth for production.

- Testing. Validate pipeline functionality by inspecting code, assessing behavior, and comparing results at each stage of development and production.

- Data observability. Monitor and optimize data quality as well as data pipeline performance. Inspect the lineage of datasets from source to target.

- Orchestration. Schedule, execute, and track workflows across pipelines and the applications that consume their outputs.

A DataOps program as described above helps cross-functional data teams ship higher-quality data products with confidence.

Embrace extensibility.

Data engineers need extensible pipelines that can accommodate inevitable changes to sources, targets, and projects. They might need to download an algorithm from an open source library or develop their own script to handle a complex transformation job. They might need to migrate data from one cloud to another, then reformat it for specialized ML model training on the new cloud provider’s platform. Changes like these require open APIs, open data formats, and the ability to use and reuse elements across a broad ecosystem. Data pipeline tools must support this level of extensibility and overcome the proprietary formats or interfaces of proprietary vendors.

Consolidate tools.

Data engineering teams can reduce the complexity of modern data environments by consolidating their pipeline tools. For example, a host of startups and some established vendors support data ingestion, transformation, and DataOps with a single tool. Enterprises that adopt such tools can reduce training time and improve productivity by fostering team collaboration. Tool consolidation is more feasible in cloud environments than on premises because heritage systems such as mainframes require specialized tools and interfaces.

Realizing the Promise of the Lakehouse with Low Code

Enterprises that automate their data engineering work, adopt the discipline of DataOps, design extensible data pipelines, and consolidate their data engineering tools can position themselves to realize the promise of the lakehouse. One example of a converged pipeline tool is Prophecy, a low-code data transformation platform whose visual pipeline builder configures complex pipelines while auto-generating the underlying PySpark, Scala, or SQL code.

To learn more about how the healthcare data firm HealthVerity uses Prophecy–and applies many of the principles described here–check out my recent webinar with them.

Ready to give Prophecy a try?

You can create a free account and get full access to all features for 21 days. No credit card needed. Want more of a guided experience? Request a demo and we’ll walk you through how Prophecy can empower your entire data team with low-code ETL today.

Ready to see Prophecy in action?

Request a demo and we’ll walk you through how Prophecy’s AI-powered visual data pipelines and high-quality open source code empowers everyone to speed data transformation

Get started with the Low-code Data Transformation Platform

Meet with us at Gartner Data & Analytics Summit in Orlando March 11-13th. Schedule a live 1:1 demo at booth #600 with our team of low-code experts. Request a demo here.

Related content

A generative AI platform for private enterprise data

Introducing Prophecy Generative AI Platform and Data Copilot

Ready to start a free trial?

Lastest posts

The AI Data Prep & Analysis Opportunity

The Future of Data Is Agentic: Key Insights from Our CDO Magazine Webinar