The Complete Data Ingestion Guide for Modern Data Strategy

Learn how data ingestion transforms scattered information into powerful insights. This comprehensive guide covers ingestion types, tools, and best practices to overcome common challenges in your data strategy.

This article was refreshed on 05/2025.

Data is everywhere, streaming in from all corners. The real challenge? Bringing it all together in a way that makes sense and drives actionable insights. That's where data ingestion comes into play.

Data ingestion consolidates data from multiple source systems and transforms disparate formats into a consistent schema that your analytics tools can interpret.

Let's dive into the world of data ingestion—what it is, why you need it, and how to navigate its challenges so your data works for you instead of creating more headaches.

What is data ingestion?

Data ingestion is the process of gathering data from scattered sources and bringing it into a single system where it becomes useful. It's the foundation of any solid data pipeline—skip this step, and nothing downstream will work properly.

Data ingestion takes your raw data, often through various data extraction methods, loads it, and prepares it for action in your database, warehouse, or data lake. The goal is simple: get that data into a format and location where you can work with it.

Without taking all the steps to ensure proper ingestion, you're left with disconnected pieces that tell an incomplete story—or worse, no story at all.

Data ingestion vs. data integration

While often mentioned in the same breath, data ingestion and data integration serve distinct purposes in the data management lifecycle. Data ingestion is primarily concerned with collecting and importing data from various sources into a storage system—it's the "gathering" phase.

On the other hand, data integration is a broader process that combines data from different sources to provide a unified view—the "combining" phase.

How is data ingestion different from data integration? Think of data ingestion as the transportation network bringing raw materials to a factory, while data integration is the factory itself, assembling those materials into a cohesive finished product.

Ingestion focuses on efficiently and reliably moving data, while integration focuses on connecting, transforming, and harmonizing that data into a meaningful whole.

An asset management company might ingest customer purchase data from their website, inventory data from their warehouse system, and customer support interactions from their help desk. Data integration would then combine these separate streams into a comprehensive customer profile that provides 360-degree visibility.

Data ingestion is a prerequisite for integration—you can't integrate what you haven't collected. However, effective integration requires additional considerations beyond ingestion, including data mapping, transformation rules, and semantic alignment.

Types of data ingestion

A guide to data ingestion wouldn't be complete without breaking down the main approaches to getting data into your systems:

- Batch Processing: In batch processing, data is collected over a period and then processed all at once. This is comparable to incremental table loads that run during off-peak hours, processing accumulated transaction data from OLTP systems into your data warehouse. Ideal for scenarios where data freshness requirements are in hours rather than seconds.

- Real-Time Processing: Data is ingested and processed instantly. Similar to implementing CDC pipelines that capture database changes and propagate them to downstream systems with sub-second latency. Critical for operational dashboards, fraud detection systems, or any use case where delayed insights impact business operations.

- Lambda Architecture: Combines both batch and real-time processing in a unified architecture. This resembles implementing both Spark batch jobs for historical data processing and Spark Streaming for real-time data using tools like PySpark for big data processing, allowing you to serve both historical analytics and real-time operational needs from a single system.

- Change Data Capture (CDC): Focuses on tracking and capturing changes in data in real-time. This is equivalent to monitoring database transaction logs to efficiently extract only modified records rather than performing resource-intensive full table scans, significantly reducing processing overhead and network traffic.

- Streaming Data Ingestion: Handles data that flows continuously from sources. Think of processing millions of events per second from web servers, IoT sensors, or application logs through technologies like Kafka Connect, ensuring data is available for analysis with minimal latency.

- Cloud-Based Data Ingestion: Utilizes cloud services to ingest data, offering the flexibility and scalability that cloud platforms provide. This is like implementing serverless ETL workflows with AWS Glue or Azure Data Factory that automatically scale to handle variable workloads without provisioning infrastructure.

- Hybrid Data Ingestion: Bridges the gap between cloud and on-premises sources. Similar to deploying data gateway services that securely extract data from on-premises databases protected by firewalls and load it into cloud data platforms, maintaining a single source of truth across hybrid environments.

Data ingestion's use cases in analytics and business intelligence

Data ingestion isn't just a technical task—it's the foundation that supports your entire analytics operation. Without properly ingested data, your analytics efforts are like trying to query from empty tables.

When you efficiently pull data into your storage systems, you ensure everyone has consistent, reliable information. This availability is crucial for generating the insights that drive smart decisions.

Data ingestion also plays a role in democratizing access across your organization, helping to enhance data literacy. Suddenly, people who aren't data engineers can tap into self-service analytics platforms. Marketing, sales, operations—everyone gains the ability to use data without waiting for custom ETL jobs to be written.

By eliminating data silos, proper ingestion supports everyone from analysts to executives, streamlining processes and helping your organization make better decisions faster. In industries like healthcare, this enables data-driven insights that lead to better patient outcomes.

Franco Patano, Lead Product Specialist at Databricks, highlighted how healthcare organizations are completely revolutionizing their data operations by modernizing their ingestion processes.

When data flows smoothly from source to insight, clinical teams can focus on improving care rather than wrestling with fragmented information systems. This shift doesn't just improve analytics—it directly impacts patient outcomes through faster, more accurate decision-making.

The benefits of efficient data ingestion

Efficient and complete data ingestion changes the game for organizations. Here's why it matters:

- Fast Data Access: When data comes in quickly, you can start analyzing sooner. This is like the difference between querying pre-aggregated tables versus computing aggregations on the fly.

- Improved Data Quality: Automation reduces human error. Clean, reliable data leads to better outcomes, just as well-maintained database indexes lead to better query performance.

- Operational Efficiency: Streamlined data flow cuts down on delays between collection and analysis. This efficiency is similar to using incremental loads instead of full table scans for each ETL or ELT run.

- Scalability: Efficient ingestion processes can handle growing data volumes without breaking a sweat. It's like having a well-partitioned data lake that continues to perform well as petabytes of data are added.

- Better Decision-Making: Access to accurate data quickly means your decisions are based on solid ground. This is comparable to having reliable metrics from your monitoring systems when deciding how to optimize your data infrastructure.

Data ingestion in ETL versus ELT

Data ingestion includes the critical processes of extracting data, loading data, and transforming data. These have been traditionally called ETL and done in the order. Data is extracted from a source system, transformed as a separate process to match the destination, and then loaded. By mastering ETL processes on platforms like Databricks, teams can better leverage cloud processing and have the flexibility to transform data to accommodate multiple uses.

Modern data cloud systems like Databricks enable teams to switch the steps and extract, load, and then transform the data. This is particularly powerful with the structured and unstructured data types needed to power AI and next-generation analytics.

When using an ELT methodology, you use the data warehouse to power speedy ingestion.

Data ingestion tools and applications

The right approach to data ingestion can significantly impact how effectively your organization leverages its data assets. Here's an overview of key technologies that power modern data pipelines.

Open-source ingestion frameworks

Open-source frameworks offer flexibility and community support for organizations building custom ingestion solutions. Apache Kafka stands out as the industry standard for high-throughput, fault-tolerant data streaming, processing millions of messages per second for companies across industries.

Apache NiFi provides a web-based interface for designing, controlling, and monitoring data flows with drag-and-drop simplicity, making it accessible to less technical users.

For batch processing, tools like Apache Spark provide powerful capabilities for processing large volumes of data efficiently, working seamlessly with modern database types to store and manage data.

Similarly, Apache Sqoop specializes in transferring data between Hadoop and structured databases, while Apache Flume is optimized for collecting, aggregating, and moving large amounts of log data.

Organizations leveraging these tools benefit from extensive documentation, active development communities, and the ability to customize functionality to meet specific requirements.

Data platforms ingestion services

Modern data platforms like Databricks have revolutionized data ingestion with managed services that eliminate infrastructure headaches. Databricks' native capabilities enhance these approaches with features like Auto Loader for continuous data ingestion, Delta Live Tables for reliable ETL pipelines, and seamless integration with streaming technologies.

These tools enable organizations to build scalable, reliable ingestion pipelines that feed directly into their analytics and AI workloads without complex infrastructure management.

David Jayatillake, VP of AI at Cube, emphasized during a recent webinar that the convergence of cloud-native services with AI-powered automation is dramatically reshaping how organizations approach data ingestion. Modern platforms don't just store data—they intelligently optimize how it flows through your entire ecosystem, reducing the need for manual intervention while increasing reliability.

Using data platforms like Databricks delivers compelling advantages: automatic scaling to handle volume spikes, pay-as-you-go pricing to control costs, and simplified management through console interfaces. Financial services firms leverage these tools to process market data feeds in real-time, while retailers use them to adjust inventory based on sales patterns.

The data ingestion pipeline and process

Understanding the steps of data ingestion helps you build a reliable process. Let's walk through each phase of getting your data from scattered sources to a usable state.

Data discovery

Before you can work with data, you need to know what you have. Data discovery is about mapping your data landscape—taking inventory before starting the project.

What data sources do you have access to? OLTP databases? Log files? API endpoints? Message queues? Each source offers different insights. An e-commerce platform might analyze database transaction logs to understand purchase patterns, while a SaaS company could use API call logs to measure feature adoption.

Data acquisition

Once you've identified your data sources, it's time to collect the data. Picture yourself connecting various data streams: production PostgreSQL databases through CDC connectors, REST API endpoints through scheduled jobs, or Kafka topics through streaming consumers.

A fintech company might collect transaction data from a payment processor API while simultaneously ingesting customer data from their CRM database.

The goal is to efficiently capture all relevant data, regardless of format or source, to build a comprehensive dataset for the next steps in your pipeline.

Data validation

With data in hand, you need to verify its quality. Data validation checks for accuracy, consistency, and completeness—think of it as running data quality tests before allowing data to enter your warehouse.

You might check for missing values, inconsistent formats, or duplicates. A healthcare data pipeline might validate patient IDs against a master patient index, while a financial system verifies transaction amounts that fall within expected ranges.

Data loading

You need to get transformed data to where it can be used. Traditionally, data pipelines followed ETL methodology, transforming data before loading it into the destination.

But today's companies need faster insights, which is where ELT comes in. By loading raw data first and transforming it within the target system, you leverage the power of modern data warehouses and get quicker access.

This approach, combined with visual low-code data pipeline tools, democratizes data access—different teams can work with the data without waiting for transformations, reducing the burden on data engineers.

Data transformation

Finally, to get value from the data you load, you need to shape validated data into a format ready for analysis. This might involve normalizing values, aggregating information, or enriching it with additional context.

You might standardize timestamp formats across sources or join customer data with geographic information. A data engineer at a retail company might transform raw point-of-sale data into a star schema optimized for OLAP queries.

Transformation ensures your data tells a consistent, meaningful story, making it easier to extract insights and make informed decisions.

Key challenges in data ingestion

Data ingestion isn't always smooth sailing. Let's look at some common obstacles you might face and how to overcome them.

Data quality, volume, and variety

One of the biggest challenges is maintaining data quality while handling massive volumes and diverse data types. Poor-quality data leads to flawed analytics and bad decisions, creating a ripple effect throughout your entire data ecosystem.

When pulling data from multiple sources, each with its own schema and constraints, you'll encounter missing values, duplicates, and conflicting information. For example, customer names might be formatted differently across your CRM, e-commerce platform, and marketing automation tools.

The volume challenge compounds this issue. As your data grows exponentially – think IoT devices generating terabytes daily or clickstream data from millions of users – traditional validation methods become impractical. Processing windows that worked for gigabytes of data may timeout with terabytes.

Variety adds another layer of complexity. Structured data from relational databases, semi-structured JSON from APIs, unstructured text from customer feedback, and binary data from media files all require different handling approaches. Each format introduces unique quality concerns that standard validation rules can't address uniformly.

To overcome these challenges, establish robust validation and cleansing processes early in your pipeline. Implement automated quality checks and define clear data quality SLAs for each source, and implement monitoring to ensure compliance. Consider implementing data catalogs and metadata management systems to track lineage and quality metrics across your data ecosystem.

Scalability and performance

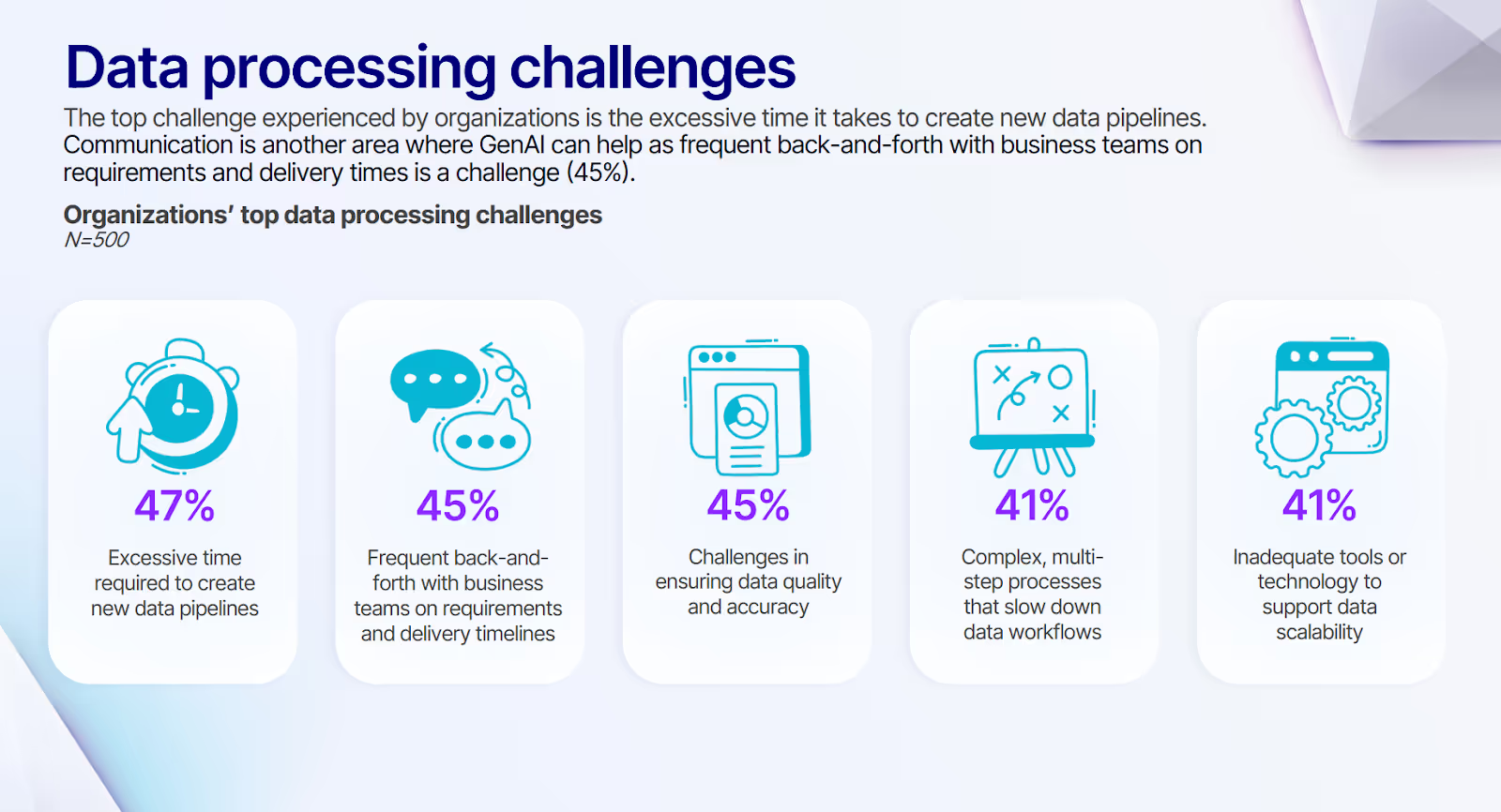

Systems that handle gigabytes efficiently can buckle under terabytes or petabytes, creating bottlenecks that ripple throughout your entire data infrastructure. According to our survey, 41% of organizations cite "inadequate tools or technology to support data scalability" as their top data processing challenge.

This technological gap becomes increasingly problematic as data volumes grow exponentially, forcing teams to choose between data freshness and completeness.

Consider a retail company that experiences 10x traffic during holiday seasons. Their ingestion pipelines must scale seamlessly to handle this surge without manual intervention. Similarly, financial institutions processing transaction data face strict SLAs – delays of even minutes can impact fraud detection systems and customer experience.

Performance bottlenecks manifest in various ways. Network bandwidth limitations might throttle data transfer rates from remote sources. Compute resources may become oversubscribed during peak processing times, causing jobs to queue.

Storage I/O can become a constraint when writing large volumes to disk. These bottlenecks delay data availability, creating frustration when stakeholders need insights now, not hours after the nightly batch job completes.

The architectural decisions you make early on can either enable or restrict scalability. Tightly coupled systems might work initially, but become brittle as data volumes grow. Monolithic ingestion processes can't take advantage of parallel processing, creating single points of failure that limit throughput.

To address these challenges and to follow data pipeline best practices, consider implementing performance optimization techniques such as partitioning data for parallel processing, using columnar storage formats for analytical workloads, and leveraging data compression to reduce transfer times.

Consider implementing incremental processing patterns that only handle changed data rather than reprocessing entire datasets.

Investing in flexible infrastructure ensures consistent performance regardless of data volume, much like how proper partitioning strategies help databases maintain performance as tables grow.

Security and compliance

Data in transit presents significant security vulnerabilities that sophisticated threats increasingly target. Without robust security measures, sensitive information becomes exposed to unauthorized access, potentially leading to devastating data breaches, regulatory penalties, and irreparable reputational damage.

The security challenge extends beyond simple encryption. Consider healthcare organizations handling protected health information (PHI) – they must maintain HIPAA compliance throughout the entire ingestion process. Global companies face a patchwork of regulations like GDPR in Europe, CCPA in California, and LGPD in Brazil, each with specific requirements for data handling.

You must track who has accessed what data, when, and for what purpose. This audit trail isn't just good practice – it's often a regulatory requirement. Additionally, implementing proper data classification ensures appropriate controls are applied based on sensitivity levels.

Implement comprehensive security controls throughout your data ingestion pipelines. Encrypt data both in transit (using TLS/SSL) and at rest (using AES-256 or similar standards). Implement secure authentication mechanisms using industry standards like OAuth 2.0 or SAML.

Work closely with your security and legal teams to ensure compliance with relevant regulations. Implement data governance frameworks that enforce policies automatically rather than relying on manual processes. Consider technologies like data masking or tokenization for sensitive fields, allowing analysis while protecting individual identities.

Regularly audit your ingestion processes against security best practices and regulatory requirements. Implement automated compliance checks that can alert you to potential issues before they become violations.

Connectivity and resilience

Reliable connections between data sources and ingestion systems form the critical backbone of your data infrastructure. When these connections fail – and they inevitably will – the consequences cascade throughout your entire data ecosystem, potentially leading to incomplete datasets, analytical blind spots, and, ultimately, flawed business decisions.

Connectivity challenges manifest in numerous ways. Network interruptions might occur due to infrastructure issues, provider outages, or maintenance windows. API rate limits could throttle your data collection, especially when dealing with third-party services.

Source systems might experience downtime or performance degradation during peak usage periods. Each scenario threatens the completeness and timeliness of your data.

The resilience challenge extends beyond simple connectivity. Consider an e-commerce platform experiencing ingestion failures during peak shopping periods that might make inventory decisions based on incomplete sales data.

Recovery from failures presents its own complexities. How do you identify exactly what data was missed? How do you backfill without creating duplicates? How do you ensure downstream systems handle late-arriving data correctly? These questions demand sophisticated solutions beyond simple retry logic.

To build truly resilient ingestion systems, implement a multi-layered approach to fault tolerance. Start with robust error handling that can differentiate between transient and permanent failures, applying appropriate retry strategies for each. Implement circuit breakers that prevent cascading failures when source systems become unresponsive.

Design for idempotency – ensuring that operations can be safely retried without creating duplicate data or side effects. Implement checkpointing mechanisms that track ingestion progress, allowing processes to resume from the last successful point rather than starting over.

Build comprehensive monitoring and alerting systems that detect failures quickly and provide actionable information for remediation. Consider implementing dead letter queues that capture failed records for later processing and analysis.

For critical data sources, implement redundant ingestion paths that can continue functioning even if primary systems fail. This might involve geographic distribution of ingestion services or alternative data collection methods that can serve as backups.

Data ingestion and the ETL modernization imperative

The data landscape has changed dramatically. Most data used to be neatly structured in relational databases, but today, we're increasingly tasked with processing unstructured data—JSON logs, XML feeds, binary files, and streaming events.

This diversity creates real challenges for legacy ingestion processes. Trying to process semi-structured JSON with tools designed for fixed-schema databases is like trying to parse XML with regular expressions—technically possible but unnecessarily painful.

Modern companies need tools that adapt to all data types. Solutions like Databricks Delta Lake provide a unified approach to managing both structured and unstructured data, helping engineers navigate this complexity and undertake data modernization.

Embracing these modern solutions isn't optional for staying competitive—it's essential as the data landscape continues to evolve.

Power data transformation after data ingestion

While Databricks tackles data ingestion challenges, data engineers still need efficient ways to transform and share insights. That's where visual, low-code tools like Prophecy make a difference.

Prophecy helps by:

- Simplifying Workflow Creation: With drag-and-drop interfaces, you can build Spark pipelines without writing complex code, similar to how modern BI tools allow non-technical users to create visualizations.

- Speeding Up Development: Low-code environments reduce development time, so you get insights faster.

- Enhancing Collaboration: Teams can work together seamlessly, breaking down silos between data engineers and analysts through version-controlled pipelines.

- Improving Data Quality: Built-in validation and testing ensure your data is reliable, similar to how schema validation ensures database integrity.

- Scaling with Ease: Prophecy integrates with existing systems, making it easier to handle growing data needs without rewriting your entire data infrastructure.

- Empowering Non-Engineers: By simplifying the process, more people can participate in data workflows, enjoying low-code SQL benefits, and democratizing access much like how SQL opened database querying to non-programmers.

To accelerate insights from your data while reducing the burden on overwhelmed engineering teams, explore The Future of Data Transformation to discover how low-code solutions democratize data access without sacrificing quality or control.

Ready to give Prophecy a try?

You can create a free account and get full access to all features for 21 days. No credit card needed. Want more of a guided experience? Request a demo and we’ll walk you through how Prophecy can empower your entire data team with low-code ETL today.

Ready to see Prophecy in action?

Request a demo and we’ll walk you through how Prophecy’s AI-powered visual data pipelines and high-quality open source code empowers everyone to speed data transformation

Get started with the Low-code Data Transformation Platform

Meet with us at Gartner Data & Analytics Summit in Orlando March 11-13th. Schedule a live 1:1 demo at booth #600 with our team of low-code experts. Request a demo here.

Related content

A generative AI platform for private enterprise data

Introducing Prophecy Generative AI Platform and Data Copilot

Ready to start a free trial?

Lastest posts

The AI Data Prep & Analysis Opportunity

The Future of Data Is Agentic: Key Insights from Our CDO Magazine Webinar