A Guide to Delta Lake’s Optimization Techniques

Discover key strategies to enhance Delta Lake performance on Databricks. Optimize schemas, partitioning, Z-ordering, file sizes, data lifecycle, and more.

Organizations today manage petabytes of data across their cloud environments, making performance optimization essential even for advanced platforms like Delta Lake. As data volumes grow exponentially, even the most robust systems face challenges delivering insights at the speed business requires.

Delta Lake serves as the reliable foundation for Databricks, bringing ACID transactions, schema enforcement, and time travel capabilities to data lakes. As Jason Pohl, Director of Data Management at Databricks, emphasized, "As your Delta Lake deployment scales, you need to optimize performance to maintain efficiency”.

In this article, we explore Delta Lake and eight proven optimization techniques that will help you enhance Delta Lake performance, reduce cloud costs, and improve query response times, enabling you to unlock the full potential of your Databricks environment.

What is Delta Lake?

Delta Lake is an open-source storage layer that brings reliability, performance, and data management capabilities to data lakes. It provides ACID transactions, scalable metadata handling, time travel, schema enforcement, and unified batch and streaming data processing on top of existing storage systems like HDFS, S3, or Azure Data Lake Storage.

By leveraging a transaction log architecture, Delta Lake ensures data consistency and enables features like snapshot isolation and rollbacks. The system is designed to handle the complexities of modern data management while maintaining compatibility with Apache Spark APIs, making it accessible to existing Spark users and applications.

While Delta Lake provides powerful capabilities out of the box, performance bottlenecks inevitably emerge as query complexity increases and concurrent user demands grow. Left unaddressed, these issues translate directly to higher cloud costs, delayed insights, and frustrated business users.

Eight optimization techniques to enhance Delta Lake performance

Let's explore eight proven strategies to optimize your Delta Lake implementation for maximum performance.

1. Design optimized table schemas

Smart schema design dramatically impacts Delta Lake query performance. By picking the right data types and structuring your tables wisely, you'll see faster queries and lower compute costs across your data platform. The foundational aspects of schema design deserve careful consideration before implementing any Delta Lake solution.

Start with appropriate data types for your columns. Use integers instead of strings for IDs and numeric values when possible. This cuts storage needs and speeds up comparisons. Put your most frequently queried fields first to make data retrieval faster. When designing schemas, think about how data will be accessed rather than just how it's generated.

Be careful with nested structures. They can improve organization, but too much nesting complicates queries and slows things down. Flatten your schema when it makes sense for your query patterns. Consider the balance between storage efficiency and query simplicity when determining your schema structure.

Both engineers and analysts benefit from optimized schemas. Engineers get better performance and lower costs, while analysts enjoy faster query responses for their dashboards and reports.

The investment in thoughtful schema design pays dividends throughout the lifecycle of your data assets, making it one of the most impactful optimizations you can implement. Regular schema reviews should be part of your data governance practice, ensuring that as usage patterns evolve, your schema designs remain optimal.

2. Implement effective partitioning strategies

Partitioning is a game-winning technique for optimizing Delta Lake performance. It lets Databricks read only relevant data segments, cutting query time and resource use dramatically. The trick is choosing partition columns that match your query patterns while avoiding common partitioning pitfalls.

Date fields make perfect partition columns for time-series analysis. Geographic analysis works best when partitioned by region or country. But finding balance is crucial. Too many partitions create small file problems, while too few limit query pruning. This balance requires understanding both your data characteristics and how users typically query the information.

Aim for partition sizes in the gigabyte range when implementing this strategy. This ensures efficient data distribution without overwhelming your metadata management. Consider the cardinality of potential partition columns and how evenly distributed your data will be across those partitions.

Your partitioning strategy should evolve with your data and query patterns. Keep an eye on query performance and adjust as needed. Delta Lake's transaction logs and metadata inspection offer valuable insights to refine your approach over time. Periodically review partition effectiveness by analyzing query patterns across your organization.

Advanced partitioning strategies might involve composite partitioning using multiple columns or dynamic partitioning approaches based on data arrival patterns. The ideal solution depends on your specific workload characteristics and should be revisited as your data volumes and query patterns evolve.

3. Leverage data skipping with z-ordering

Z-ordering is Delta Lake's multi-dimensional clustering technique that boosts query speed without partitioning's limitations. This powerful method physically reorganizes data for faster access, especially helpful with high-cardinality columns or queries filtering on multiple dimensions where traditional partitioning becomes impractical.

To implement Z-ordering, use the OPTIMIZE command with the ZORDER BY clause:

OPTIMIZE table_name ZORDER BY (col1, col2)This command reorders data based on the specified columns, putting related information in the same files. This makes Delta Lake's data skipping algorithms more effective, letting queries bypass large chunks of irrelevant data. The technique works by interleaving the bits of multiple column values, creating a Z-order curve that preserves locality across multiple dimensions.

Z-ordering works wonders where traditional partitioning fails due to high cardinality. It enables efficient data skipping on multiple columns simultaneously without creating an explosion of small files. This is particularly valuable for analytical queries that filter on several dimensions concurrently.

The impact on query performance can be substantial for analytics workloads. This speed boost helps business analysts who need quick responses to ad-hoc queries, enabling faster decision-making. When choosing columns for Z-ordering, prioritize those frequently used in query predicates and consider columns often used together in the same queries.

Unlike partitioning, which requires careful upfront planning, Z-ordering can be applied and adjusted after data is loaded, making it a more flexible optimization technique that can evolve alongside your understanding of query patterns.

4. Optimize file sizes and compaction

The "small files problem" can tank Delta Lake's performance—thousands of tiny files create metadata overhead and slow down queries. This issue often comes from frequent writes, especially in streaming or batch updates, with many small transactions creating individual files in the lake.

To solve this issue, Delta Lake also uses the OPTIMIZE command for file compaction:

OPTIMIZE my_table WHERE date = '2025-04-10' ZORDER BY (eventType)This command combines small files while reordering data for faster queries. Ideal file size typically ranges from 256MB to 1GB, balancing metadata overhead against parallel processing efficiency. The specific optimal size depends on your cluster configuration and query patterns.

Compaction delivers real performance gains by reducing the work needed to scan data and manage file metadata. When file counts grow too large, even the Delta Lake transaction log can become a bottleneck during operations that need to list or scan files.

For business users, optimized file sizes mean faster queries, lower cloud costs, and snappier dashboards. Data engineers should schedule regular optimization jobs, especially for frequently updated tables, to keep performance high. For organizations looking into data prep modernization options, optimizing file sizes and compaction is a key consideration.

Furthermore, auto-compaction can further reduce the maintenance burden. You can enable it at the table level:

ALTER TABLE delta_table SET TBLPROPERTIES ('delta.autoOptimize.autoCompact' = 'true');This feature automatically optimizes file sizes during or after writes, reducing manual work. The system intelligently determines when compaction will provide benefits and runs the operations in the background, maintaining performance without explicit intervention. Consider implementing file size monitoring to detect when tables might benefit from compaction.

5. Implement efficient data lifecycle management

Delta Lake's time travel and versioning capabilities are fantastic, but they affect performance and storage costs if left unmanaged. Good data lifecycle management keeps Delta Lake running smoothly while maintaining data integrity and compliance over time.

The VACUUM command helps manage Delta Lake's data lifecycle by removing files no longer needed for time travel or rollbacks:

VACUUM delta_table RETAIN 7 DAYSThis command removes files older than 7 days, freeing storage space and potentially improving query performance. Be careful with retention periods, though. Longer retention allows more historical access but increases costs, while shorter periods save on storage but limit historical queries. Your approach should be guided by specific business requirements and compliance obligations.

When setting up VACUUM, think about your compliance needs and typical query patterns. Financial companies with large financial data might need longer retention for auditing, while real-time sensor data could have shorter retention periods. The retention strategy should align with your broader data governance policies.

Moving older, less-accessed data to lower-cost storage tiers is another smart strategy. You keep data available for occasional queries while reducing costs. This approach balances performance, cost, and accessibility through a tiered storage architecture.

With good data lifecycle practices, your active data layer stays fast while still supporting self-service analytics. Consider implementing automated processes that track data access patterns and help determine optimal lifecycle policies based on actual usage rather than assumptions. This data-driven approach ensures your management practices evolve alongside your organization's needs.

6. Use Delta cache and adaptive query execution

Delta Lake uses powerful caching to speed up repeated queries. The disk cache reduces I/O by keeping frequently accessed data, while the memory cache holds hot data ready for use. To turn on disk caching:

SET spark.databricks.io.cache.enabled = true;This simple setting leads to significant speed gains, especially for exploratory analysis where similar queries run repeatedly. The caching system works by recognizing data that's frequently accessed and ensuring it remains easily available, reducing the need to read from slower storage systems repeatedly.

Similarly, Adaptive Query Execution (AQE) dynamically optimizes query plans based on runtime statistics. It adjusts join strategies, partition sizes, and even rewrites query predicates on the fly. To give AQE accurate statistics to work with, regularly run:

ANALYZE TABLE my_table COMPUTE STATISTICS FOR ALL COLUMNS;This updates column-level statistics, enabling more efficient query optimization. The statistics provide the query optimizer with crucial information about data distribution, allowing it to make informed decisions about execution strategies.

Delta caching and AQE together yield impressive results, particularly for complex analytical workloads with multiple joins and aggregations. The combination addresses both I/O bottlenecks through caching and processing inefficiencies through adaptive execution plans, greatly enhancing data transformation with Databricks.

For business teams, these optimizations create more responsive dashboards and faster data exploration. Queries that once needed careful planning can now run ad hoc, supporting more agile, data-driven decision-making. These performance improvements are particularly valuable in interactive analytics scenarios where analyst productivity depends directly on query response times.

7. Optimize Merge and Update operations

Delta Lake's MERGE operations enable efficient upserts but can create performance issues if implemented poorly. To optimize these operations, focus on pre-filtering source data, using join conditions effectively, and batching changes appropriately for maximum efficiency.

For MERGE operations, use WHERE clauses to limit the scope:

MERGE INTO target_table

USING source_table

ON target_table.id = source_table.id

WHEN MATCHED THEN UPDATE SET target_table.value = source_table.value

WHERE target_table.date > '2025-01-01'This reduces the amount of data scanned and processed during the merge. Pre-filtering both source and target data minimizes the processing overhead and improves overall operation efficiency.

Poorly executed merge operations lead to excessive file rewrites, versioning overhead, and fragmentation. Try using low shuffle merge techniques to mitigate these issues. This optimization excludes unmodified rows from shuffle operations, cutting costs and improving performance.

Batch your changes appropriately for merge efficiency, instead of frequent small merges, group updates into larger batches. This reduces the number of merge operations and associated overhead. The optimal batch size depends on your specific workload characteristics and available resources.

These optimization techniques can significantly reduce data pipeline completion times. This means more current data for analysis and decision-making, particularly helpful for operational analytics where data freshness matters most. Consider implementing monitoring for merge operations to track their performance and identify opportunities for further optimization.

8. Continue to monitor and optimize query patterns

Watching query patterns and performance metrics is crucial for ongoing Delta Lake optimization. By tracking metrics like execution time, bytes scanned, and shuffle data, you can spot bottlenecks and tune your data platform for peak performance. Establishing a performance monitoring practice provides the insights needed for continuous improvement.

Databricks offers excellent tools for monitoring query performance through its query history and metrics UI. These help you identify frequently run and resource-intensive queries that need optimization. By analyzing query patterns across your organization, you can prioritize optimization efforts based on business impact and resource consumption.

Watch out for common query problems like SELECT * operations, inefficient joins, and missing filter pushdown. Fixing these queries can dramatically improve performance. Simply replacing SELECT * with specific column selections reduces unnecessary data scanning. Look for opportunities to rewrite queries to better leverage Delta Lake's optimization capabilities.

Good query optimization makes your entire data platform more responsive and cost-effective. Business analysts running exploratory queries benefit most, as optimized patterns turn sluggish operations into near-real-time insights. The performance improvements directly translate to better analyst productivity and more timely business decisions.

Query optimization connects directly to governance and cost management. Help business teams understand how their query patterns affect system performance and cloud costs. This awareness often leads to more thoughtful query design and better resource use across the organization. Consider implementing query standards and best practices training to cultivate a performance-oriented data culture.

Transform data operations in Databricks with an AI-powered, visual solution

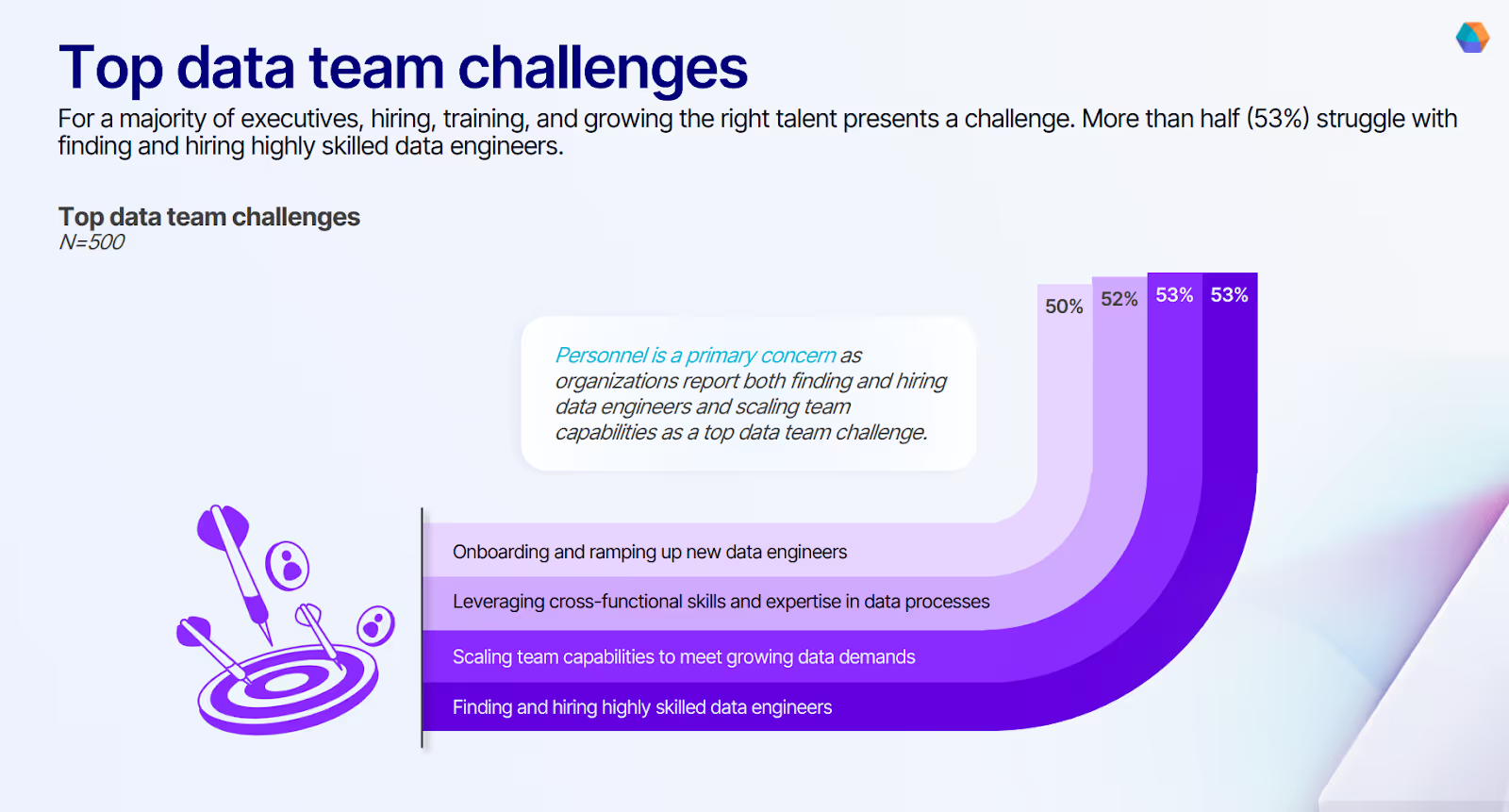

While these optimization techniques are powerful, they require specialized knowledge and ongoing maintenance. According to our survey, a majority of executives face significant talent challenges, with more than half (53%) struggling to find and hire highly skilled data engineers.

Modern data integration platforms are now simplifying and automating many of these practices through visual interfaces, addressing this talent gap.

Here’s how Prophecy's AI-powered, visual, low-code platform transforms data operations in Databricks:

- Visual pipeline development with code generation: Prophecy allows you to visually design data pipelines that automatically generate high-quality, tested code, enabling both technical and non-technical team members to contribute to the development process while maintaining code quality and consistency.

- Git integration for version control: Prophecy integrates with Git, enabling proper version control, branching, and merging of your pipelines while abstracting the complexity for less technical users, ensuring you can apply software engineering best practices to data engineering.

- Metadata-driven testing framework: Prophecy provides comprehensive testing capabilities directly in the visual interface, allowing you to create unit and integration tests without writing code, dramatically improving pipeline reliability while making testing accessible to the entire team.

- Continuous integration automation: Prophecy automatically triggers tests and validations when code changes are pushed, providing immediate feedback on quality and reducing the lengthy startup times typically associated with complex Databricks pipelines.

To overcome the challenges of overloaded data engineering teams and unlock the full potential of your data, explore 4 data engineering pitfalls and how to avoid them to accelerate innovation through low-code approaches that maintain engineering best practices.

Ready to give Prophecy a try?

You can create a free account and get full access to all features for 21 days. No credit card needed. Want more of a guided experience? Request a demo and we’ll walk you through how Prophecy can empower your entire data team with low-code ETL today.

Ready to see Prophecy in action?

Request a demo and we’ll walk you through how Prophecy’s AI-powered visual data pipelines and high-quality open source code empowers everyone to speed data transformation

Get started with the Low-code Data Transformation Platform

Meet with us at Gartner Data & Analytics Summit in Orlando March 11-13th. Schedule a live 1:1 demo at booth #600 with our team of low-code experts. Request a demo here.

Related content

A generative AI platform for private enterprise data

Introducing Prophecy Generative AI Platform and Data Copilot

Ready to start a free trial?

Lastest posts

The Future of Data Is Agentic: Key Insights from Our CDO Magazine Webinar

Analytics as a Team Sport: Why Data Is Everyone’s Job Now