A Modern Guide to ETL (Extract, Transform, Load) for Data Leaders

Discover how modern ETL powers data extraction, transformation, and loading. Gain insights on real-time processing, cloud-native tools, and business value.

Organizations today are drowning in data yet starving for insights. The explosion of data sources and volumes has created vast digital reservoirs across enterprises, but traditional data integration approaches struggle to transform this raw information into actionable intelligence fast enough to drive critical business decisions.

The solution isn't simply updating existing technologies—it requires fundamentally reimagining how data flows through your organization. Modern ETL demands a shift from rigid, technically complex processes to more agile, democratized approaches that balance self-service capabilities with enterprise governance.

In this article, we'll explore the evolution of ETL from its traditional roots to modern implementations, examine key differences between ETL and ELT, compare various integration methods, and provide practical guidance for building an effective data transformation strategy that empowers both technical and business users.

What is ETL (Extract, Transform, Load)?

ETL (Extract, Transform, Load) is a data integration process that combines information from multiple sources into a single, consistent data store that feeds into your data warehouse, data lakes, data lakehouses, databases, or storage system. It is the backbone of business intelligence, helping organizations gain meaningful insights by standardizing different data formats into a unified structure for analysis.

At its heart, ETL connects scattered, inconsistent data sources to actionable business insights. By transforming raw data into something usable, ETL pipelines let business users query, analyze, and make decisions based on complete, accurate information instead of fragmented data points.

The evolution of ETL

ETL began in the 1970s when companies started moving data between early database systems. Back then, it was mostly manual work requiring specialized programming skills, limited by the mainframe computers of the era.

The 1990s client-server revolution brought dedicated ETL tools with graphical interfaces and better capabilities. This era saw data warehousing concepts emerge, requiring robust ETL processes to centralize company data.

The big data boom of the 2000s changed everything as organizations needed to process unprecedented volumes of structured and unstructured data. The old batch-oriented methods expanded to include real-time processing to satisfy the growing hunger for timely insights.

Yet, despite these advancements, in one real-world example outlined by Databricks' Soham Bhatt, a team spent an entire month (two development sprints) trying to reverse engineer a single legacy ETL pipeline that loaded a customer dimension. The original code was developed five to 10 years prior with no maintained documentation, illustrating the ongoing challenges of legacy ETL systems.

These documentation and reverse engineering challenges represent a common theme across enterprises still relying on traditional ETL processes. As Bhatt further notes, many organizations want to become data and AI-driven companies but struggle when they "get yet another technology for GenAI, yet another technology for ML" without properly planning their data architecture.

This fragmented approach explains why "eighty-five percent of machine learning AI projects are still not in production" - they're built on ETL foundations that weren't designed for today's data volumes and variety.

Cloud computing marks the latest stage in addressing these fundamental challenges, where ETL tools have become cloud-native services offering scalability, pay-as-you-go pricing, and fewer infrastructure headaches. Today's ETL platforms integrate AI capabilities and support streaming data, enabling more sophisticated integration patterns.

ETL vs. ELT

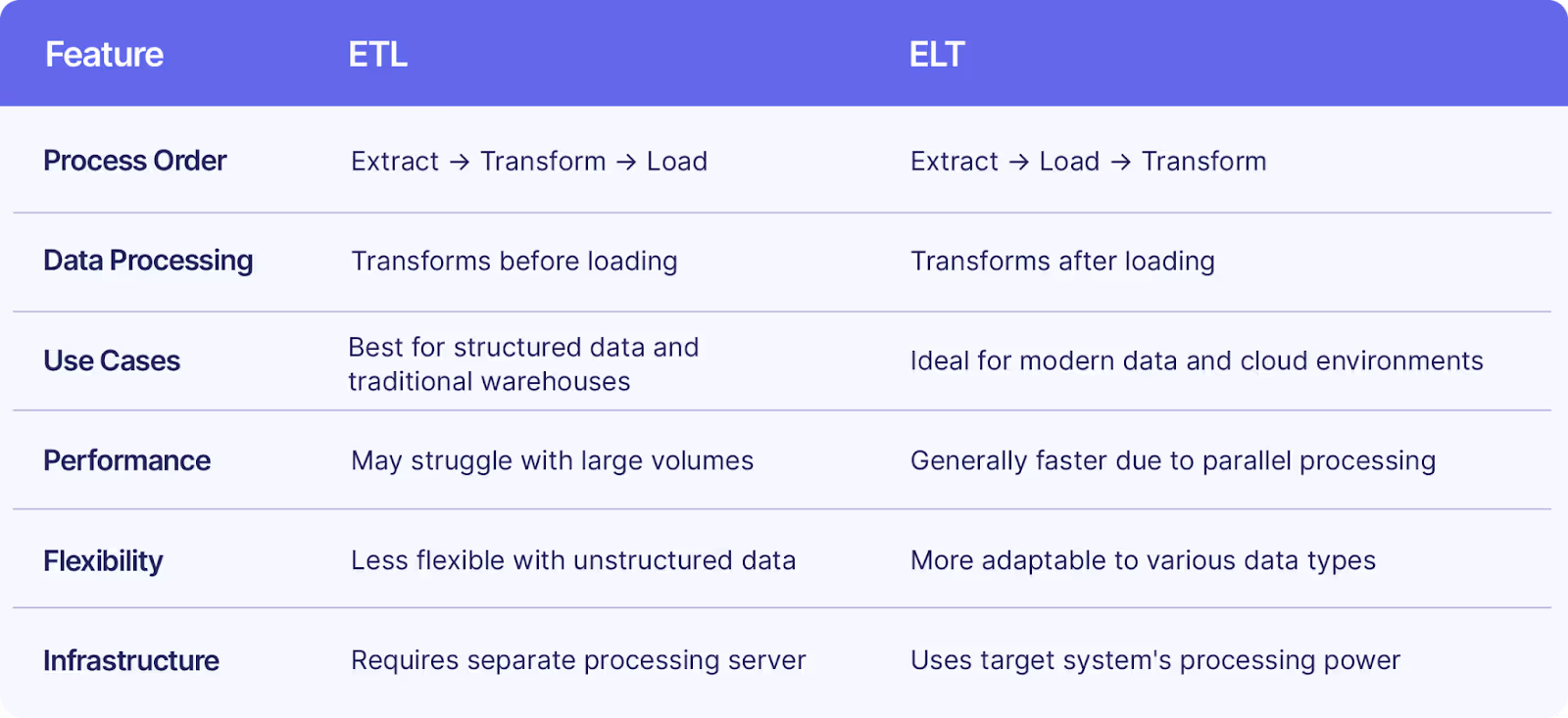

Traditional ETL and modern ELT (Extract, Load, Transform) both integrate data from different sources, but they differ in how and when transformations happen:

In ETL, data transforms before loading into the target system. You extract data from sources, process it in a staging area to meet business rules, then load it into the destination. This works well with limited data volumes or when transformations are complex.

ELT flips this sequence by loading raw data into the target system first, then performing transformations there. This approach uses the computing power of modern cloud platforms like Databricks, eliminating separate transformation servers.

ETL requires dedicated server infrastructure for the transformation phase, while ELT uses scalable cloud resources. ETL gives you better control over data quality before loading, while ELT offers more flexibility for iterative transformations and exploration.

Choosing between technologies like Apache Spark™ and Snowflake can significantly impact your ETL or ELT strategies, depending on your organization's needs. Smart organizations often use hybrid approaches, choosing ETL or ELT based on specific use cases, data volumes, and transformation complexity.

The benefits of modern ETL processes

Modern ETL systems deliver major advantages for organizations looking to maximize their data value:

- Improved data quality: Modern ETL standardizes formats, removes duplicates, and validates information against business rules. This ensures your analytics and reporting rest on accurate, reliable data, reducing the risk of bad business decisions.

- Centralized data repositories: By bringing data from diverse sources into a unified destination, ETL creates a single source of truth. This eliminates information silos and provides consistent data access across departments, improving teamwork and coordination.

- Enhanced decision-making capabilities: With properly integrated and transformed data, business users can access comprehensive information for smarter decisions. Modern ETL combines previously disconnected data points, revealing insights and patterns hidden in isolated systems.

- Greater scalability: Modern cloud-based ETL tools handle growing data volumes without big infrastructure investments. As your data needs grow, these systems automatically scale to handle larger workloads, maintaining performance without manual intervention.

- Real-time data processing: Today's ETL systems support streaming data integration, enabling near-real-time insights for time-sensitive operations. This lets you respond quickly to changing conditions instead of waiting for batch processing to finish.

- Reduced maintenance burden: Cloud-native ETL services eliminate the need to maintain complex on-premise infrastructure, freeing IT resources for higher-value work. These platforms handle patching, upgrades, and scaling automatically, cutting operational overhead.

- Better regulatory compliance: Well-designed ETL processes can enforce data governance policies, maintain audit trails, and implement data protection measures, helping you meet regulations like GDPR or HIPAA.

- Improved development tools: Modern ETL platforms provide enhanced development environments that address the limitations of current Spark IDEs, making it easier for developers to build and maintain data pipelines efficiently.

Steps in the ETL process

The ETL workflow systematically moves and prepares data from source systems to a target repository. This involves three sequential stages: extraction, transformation, and loading.

What is extraction?

Extraction is the first ETL process that collects data from various source systems. This crucial first step identifies and captures the data needed for analysis from databases, applications, files, APIs, and other repositories.

The extraction process typically follows three main approaches. Full extraction copies all source data each time the ETL process runs, suitable for smaller datasets that change frequently. Incremental extraction identifies and processes only data changed since the last extraction, saving time and resources for larger datasets.

Change Data Capture (CDC) represents the most sophisticated extraction method, constantly monitoring source systems for data changes and capturing them in real-time. CDC minimizes processing overhead and enables near-real-time data integration, making it valuable for time-sensitive operations requiring current information.

What is transformation?

Transformation is the middle stage where raw extracted data converts into a format suitable for analysis and business use. This phase applies business rules, data quality standards, and structural changes to prepare data for its destination.

Common transformation operations include filtering unwanted records, sorting data into specific sequences, aggregating individual records into summaries, and joining related data from different sources. Additional transformations might include data type conversions, calculations, validation against business rules, or enrichment with supplementary information.

The transformation phase is typically the most complex and computationally intensive part of ETL, often requiring significant business logic and data manipulation. It's also where the most value emerges, as raw data becomes meaningful information that supports analysis, reporting, and decision-making.

What is loading?

Loading is the final ETL phase where transformed data writes into the target destination system. This destination is typically a data warehouse, data mart, database, or increasingly, a cloud data platform that serves as the foundation for business intelligence and analytics.

The loading process can follow several patterns based on business needs. Batch loading processes data in groups at scheduled intervals, making sense when real-time access isn't critical. This approach handles large volumes efficiently but introduces some delay between data creation and availability.

Real-time or streaming loading continuously moves small batches of data into the target system with minimal delay. This supports time-sensitive operations requiring current information, though it may need more complex infrastructure and monitoring to maintain performance.

During loading, the ETL process may also create or update indexes, implement constraints, or perform other operations to optimize the target system for analytical queries, ensuring transformed data is not just present but efficiently accessible.

Types of ETL tools

Today's ETL ecosystem covers everything from traditional enterprise systems to cloud-native platforms and open-source alternatives. When choosing an ETL tool, consider your specific data integration requirements, existing technology infrastructure, team capabilities, and long-term scalability needs.

Different tools come with their own advantages and trade-offs, so align your choice with your organization's unique situation.

Open-source ETL tools

Open-source ETL tools offer remarkable flexibility for organizations wanting customizable data integration solutions. Maintained by active developer communities, these tools provide transparency into source code and continuous improvements through community contributions.

The cost advantage is huge—most open-source ETL platforms work without licensing fees, requiring only infrastructure and maintenance investments. For startups and small-to-medium businesses with limited budgets but technical talent, this presents an attractive option for building sophisticated data pipelines.

Community support is another major benefit. Platforms like Apache NiFi, Talend Open Studio, and Apache Airflow have extensive knowledge bases, forums, and documentation resources. When problems arise, these communities often provide faster troubleshooting and implementation guidance than traditional vendor support.

The trade-off comes with complexity. Open-source tools typically require greater technical expertise to deploy and maintain effectively. You must evaluate whether you have sufficient in-house skills or are willing to invest in developing these capabilities to get the full potential from open-source ETL options.

Legacy enterprise ETL platforms

Legacy enterprise ETL platforms remain central to many large organizations' data ecosystems. These established solutions, developed over decades, offer robust capabilities for processing structured data within traditional data warehouse environments.

These platforms excel with well-defined, consistent data structures through tested, reliable processes. Their maturity provides comprehensive documentation, established best practices, and predictable performance for complex transformations. Many organizations have invested heavily in these systems, building intricate pipelines that power critical business operations.

However, legacy platforms often struggle with the flexibility needed for modern data sources. Their design predates the explosion of semi-structured and unstructured data, making them less suitable for processing formats like JSON, XML, or streaming data without significant customization. They also typically require substantial upfront licensing costs and ongoing maintenance investments.

Modernization paths exist for organizations deeply invested in legacy ETL tools. Many vendors offer cloud extensions, API connectivity, and modular approaches that help bridge traditional platforms with newer data sources and destinations, creating hybrid architectures that preserve existing investments while enabling future growth.

Modern cloud-native ETL solutions

Modern cloud-native ETL solutions represent the latest evolution in data integration technology, designed specifically for cloud environments and contemporary data needs. These platforms use cloud architecture to deliver unprecedented scalability, flexibility, and performance.

Cloud-native solutions like Databricks serverless compute can achieve up to 98% cost savings compared to traditional approaches through pay-as-you-go pricing models and auto-scaling capabilities. They eliminate the need for overprovisioning infrastructure by automatically adjusting resources based on actual processing demands, whether handling routine workloads or unexpected data volume spikes.

A fundamental shift with ETL tools is the transition from traditional ETL to ELT (Extract, Load, Transform) processing. This approach uses the computing power of modern data warehouses and lakes to transform data after loading, enabling greater flexibility and reducing pipeline complexity.

These platforms also excel at handling diverse data types—from structured database records to semi-structured JSON, unstructured text, and real-time streams. Their flexible processing capabilities make them ideal for organizations dealing with varied data sources or those pursuing advanced analytics and machine learning initiatives that require comprehensive data integration.

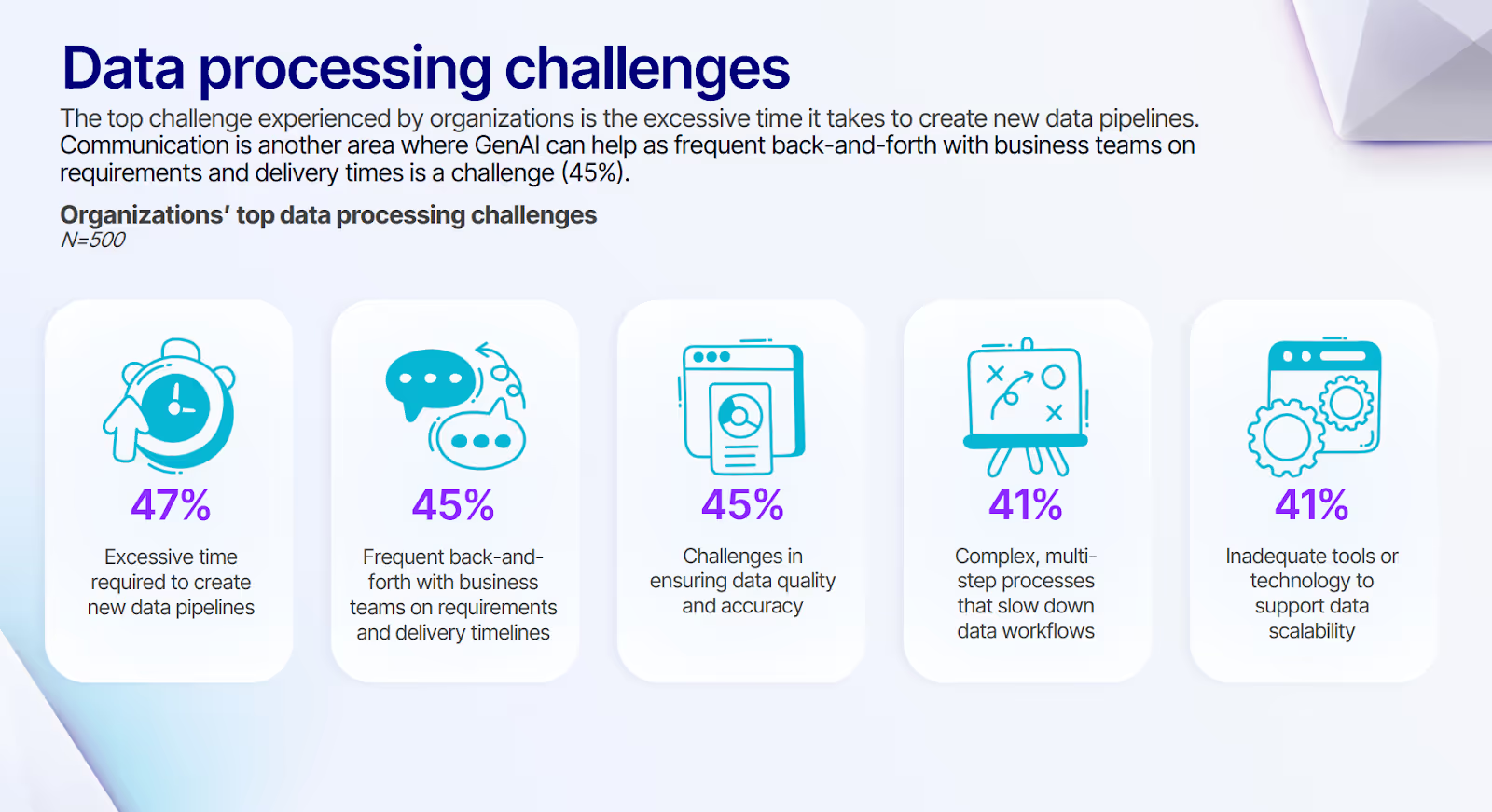

This is especially critical as excessive time spent building new data pipelines remains a significant challenge for 47% of data teams, underscoring the need for more streamlined solutions to data integration.

An example of these solutions is cloud-native data engineering platforms that leverage the benefits of the cloud to deliver scalable and efficient ETL processes. In addition, services like Databricks Partner Connect provide integrated solutions for modern ETL, enabling seamless data engineering workflows.

ETL vs. other data integration methods

Data integration isn't one-size-fits-all. ETL (Extract, Transform, Load) and its variant ELT are powerful approaches, but they exist alongside several other integration methods. Understanding the differences between these approaches helps you select the right solution for your specific business needs and technical requirements.

Let's explore how ETL compares to other major data integration approaches, examining their strengths, limitations, and ideal use cases.

ETL vs. data virtualization

Data virtualization creates a logical abstraction layer that provides unified access to data without physically moving or copying it. This contrasts with ETL, which extracts data from sources, transforms it, and loads it into a destination system.

While ETL processes consolidate data into a central repository like a data warehouse, data virtualization leaves data in its original location and creates a virtual layer that connects to and queries source systems on demand. This approach eliminates data duplication and reduces storage costs, but it introduces dependencies on source system availability and performance.

ETL excels in analytical workloads requiring historical analysis and predictable performance, as transformed data is stored in optimized formats for querying. Virtualization shines when real-time access to operational data is needed, regulatory requirements limit data movement, or when rapid prototyping of data models is desired.

The choice between ETL and virtualization often comes down to latency requirements, query performance needs, and whether you're building a system of record versus enabling quick access to operational data.

ETL vs. API-based integration

While virtualization provides a logical data layer, API-based integration takes a different approach by enabling direct communication between applications through standardized interfaces. API integration focuses on real-time application-to-application communication, whereas ETL is optimized for collecting, transforming, and consolidating data for analytical purposes.

API-based integration methods like Enterprise Service Buses (ESBs) and Integration Platform as a Service (iPaaS) solutions excel at process automation, microservices orchestration, and enabling real-time data flows between operational systems. Modern ETL, on the other hand, is designed for data consolidation, cleansing, and preparation for analysis.

Modern organizations often employ both approaches complementarily. APIs might handle real-time transactions and operational integrations, while ETL processes aggregate and transform that same data for historical analysis, reporting, and machine learning.

The decision between API integration and ETL depends largely on whether you need real-time operational data exchange or consolidated analytical datasets.

ETL vs. data replication

Unlike API integration, which focuses on real-time communication, data replication focuses on creating and maintaining exact copies of data across multiple systems. Where ETL transforms data to make it suitable for analytical purposes, replication prioritizes creating identical copies of source data with minimal transformations.

Replication excels in disaster recovery scenarios, distributing read workloads across multiple databases, and maintaining synchronized copies of operational data. It aims to minimize latency between changes in the source and target systems.

Modern ETL, by contrast, is purpose-built for transforming raw data into formats optimized for analysis, often involving complex business rules, data quality processes, and dimensional modeling.

Many modern data architectures combine these approaches. For example, an organization might use replication to create a backup of operational databases, then apply ETL processes to transform that replicated data into structures suitable for business intelligence tools.

The choice between ETL and replication typically depends on whether you need transformed data for analysis or identical copies for operational resilience and load balancing.

ETL use cases across industries

ETL processes form the backbone of data-driven decision making across virtually every industry. Let’s see some examples of how different sectors are applying ETL approaches to solve their unique challenges and transform raw data into business value.

Financial services data integration

Financial institutions handle massive volumes of data from trading platforms, customer systems, and market feeds. Traditional ETL processes created bottlenecks as institutions struggled to consolidate this data for regulatory reporting, risk assessment, and customer analytics in a timely manner.

Modern ELT approaches now allow financial firms to load raw data directly into cloud data warehouses before transformation. This shift enables them to meet stringent compliance requirements while simultaneously extracting customer insights for personalized service offerings and fraud detection.

Financial organizations using low-code tools empower business analysts to create their own data pipelines without dependency on IT. This democratization accelerates time-to-insight while maintaining governance guardrails that ensure regulatory compliance with frameworks like GDPR and Basel III.

Healthcare and pharmaceutical data pipelines

Healthcare organizations face unique ETL challenges with strictly regulated patient data across disparate systems—from electronic health records to insurance claims and clinical trials. Traditional batch-oriented ETL processes couldn't deliver the real-time insights needed for critical care decisions.

Modern ELT platforms now enable healthcare providers to securely process patient data with built-in anonymization and encryption. These platforms maintain HIPAA compliance while creating unified patient views that improve diagnostic accuracy and treatment outcomes through more comprehensive data analysis.

Cloud-based ELT approaches also allow them to process terabytes of genomic data and real-world evidence, accelerating drug discovery while maintaining stringent quality controls required by regulatory bodies.

Asset management data operations

Asset management firms rely on processes to consolidate financial data from global markets, portfolio systems, and client databases. These firms need to transform and normalize data from hundreds of sources to support investment decisions, risk analysis, and client reporting.

Traditional ETL created integration bottlenecks that delayed critical analyses during market volatility. Modern cloud-based ELT approaches now enable asset managers to process market movements in near real-time, providing portfolio managers with timely insights for rapid position adjustments.

Self-service data tools also allow investment analysts to build their own data pipelines without coding expertise. This democratization accelerates insight generation while maintaining strict governance standards critical in regulated financial environments, creating a balance between agility and compliance.

The next step in ETL

Modern ETL platforms solve a critical business challenge: enabling non-technical users to access and transform data independently while maintaining organizational control. By embedding governance mechanisms directly into intuitive interfaces, these solutions create guardrails that protect data integrity without blocking accessibility.

Visual interfaces with drag-and-drop components allow business users to build data pipelines without coding expertise. Many platforms now integrate AI assistants that can translate natural language requests into data transformations, making complex operations accessible to domain experts across departments. Pre-built templates accelerate common workflows while enforcing standardized approaches.

Effective governance in self-service ETL maintains security through role-based access controls, ensuring users only interact with appropriate data assets. Quality controls automatically validate transformations, preventing downstream issues before they occur. Cost management features monitor resource usage, preventing runaway expenses as adoption grows.

Enhancing the collaboration between data engineers and business users is key to achieving this balance. By implementing the right modern ETL platform with thoughtful governance policies, you create data environments where business users can move quickly while maintaining the guardrails necessary for enterprise security, compliance, and cost control.

It's also important to balance self-service analytics and compliance effectively to ensure that democratization of data does not compromise security. Furthermore, reducing IT dependency is crucial for agility. Strategies for reducing IT dependency can empower businesses to respond faster to market demands.

Enabling self-service analytics with governance

The ETL landscape is being reshaped by powerful technologies that promise to revolutionize how organizations handle data integration. As industry analyst Hyun Park points out, modern data transformation has evolved far beyond "simply building the initial transformation pipelines and workflows that we all grew up with ten, fifteen, twenty years ago," now addressing the complexities of semi-structured and unstructured data that traditional approaches weren't designed to handle.

Artificial intelligence and machine learning are now automating traditionally manual tasks, with platforms like Prophecy's Data Copilot allowing you to describe transformations in natural language and generating the necessary code automatically.

The democratization of data access through low-code data transformation platforms represents another significant shift. These tools empower business users to create and manage their own data pipelines without technical expertise, reducing dependency on IT departments and accelerating time-to-insight. The need for ongoing learning in data engineering is also recognized, with data engineering training programs becoming increasingly important to keep skills current.

Integration with modern data ecosystems is becoming seamless as modern ETL tools develop deeper connections with data lakes, streaming platforms, and machine learning frameworks. This connectivity creates opportunities for more sophisticated analytics and real-time decision making across previously siloed systems.

As organizations face growing data volumes and diversity, the modern ETL approach will continue evolving toward more intelligent, accessible solutions that balance governance with flexibility. The future belongs to platforms that can deliver both power and simplicity, enabling you to transform your data assets into competitive advantages with unprecedented speed and efficiency.

Considering AI strategies such as prompt engineering vs. fine-tuning can also guide how enterprises implement generative AI in data transformation. Platforms like the Prophecy Generative AI platform demonstrate how rapid application development with AI is becoming a reality.

Power your ETL with visual self-service tools

Modern visual data pipeline platforms are democratizing access to data engineering by providing powerful self-service capabilities while maintaining enterprise-grade governance.

Platforms like Prophecy exemplify this new generation of tools that combine visual development environments with AI-powered assistance. Instead of requiring engineers to code every transformation, Prophecy enables a broader range of users to contribute to data pipelines through visual, drag-and-drop interfaces.

Here is how Prophecy balances accessibility with governance:

- Visual pipeline building with intuitive drag-and-drop interfaces that generate optimized, production-ready code behind the scenes

- AI-assisted development that boosts productivity, making low-code the default method for building enterprise pipelines

- Built-in governance mechanisms including data lineage tracking, role-based access controls, and policy enforcement

- Real-time error detection with AI-powered suggestions to fix issues before they impact production

- Enterprise scalability that allows you to build and maintain thousands of pipelines while enforcing standards

To break through the bottlenecks caused by traditional ETL processes that slow data access and limit AI innovation, explore The Death of Traditional ETL whitepaper to discover modern pipeline patterns that accelerate self-service analytics and AI adoption.

Ready to give Prophecy a try?

You can create a free account and get full access to all features for 21 days. No credit card needed. Want more of a guided experience? Request a demo and we’ll walk you through how Prophecy can empower your entire data team with low-code ETL today.

Ready to see Prophecy in action?

Request a demo and we’ll walk you through how Prophecy’s AI-powered visual data pipelines and high-quality open source code empowers everyone to speed data transformation

Get started with the Low-code Data Transformation Platform

Meet with us at Gartner Data & Analytics Summit in Orlando March 11-13th. Schedule a live 1:1 demo at booth #600 with our team of low-code experts. Request a demo here.

Related content

A generative AI platform for private enterprise data

Introducing Prophecy Generative AI Platform and Data Copilot

Ready to start a free trial?

Lastest posts

The AI Data Prep & Analysis Opportunity

The Future of Data Is Agentic: Key Insights from Our CDO Magazine Webinar