Escape the Legacy ETL Trap and Embrace Modern Pipelines That Deliver

Legacy ETL pipelines can't handle today's AI demands. This guide explains modern approaches, compares ETL vs. ELT architectures, and provides a practical roadmap for modernizing your data transformation strategy.

This article was refreshed on 05/2025.

ETL (Extract, Transform, Load) pipelines form the foundation of business intelligence and analytics initiatives across industries. And they're more relevant than ever.

The challenges facing data teams are intensifying as data grows in volume, variety, and velocity. Organizations now manage petabytes of information, from structured databases to unstructured content, while facing demands for insights delivered closer to real time.

This pressure is driving a fundamental evolution in how we approach data transformation.

Modern ETL pipelines have evolved significantly, moving from proprietary on-premises systems to cloud-native architectures, open-source frameworks, and AI-assisted development.

Let's explore the fundamentals of ETL pipelines, examine their types and benefits, compare approaches, and highlight the emerging trends reshaping data integration for the AI era.

What is an ETL pipeline?

An ETL pipeline is a sequence of processes that extract data from multiple sources, transform it according to business requirements, and load it into a target system where it can be analyzed and utilized for business insights.

It's the fundamental architecture behind data integration efforts, enabling organizations to consolidate disparate data into usable, valuable information.

At its core, an ETL pipeline automates the collection, processing, and delivery of data, handling the complex task of moving information between systems while ensuring its quality and consistency. These pipelines are critical connectors in the modern data ecosystem, bridging the gap between raw data and actionable intelligence.

Here is a diagram of an ETL pipeline process:

While the ETL concept has been a cornerstone of business intelligence for decades, today's implementations differ dramatically from their predecessors. Modern ETL pipelines must handle exponentially larger data volumes, accommodate diverse data types (from structured database records to unstructured text and images), and meet increasingly stringent requirements for speed and reliability.

Contemporary ETL pipelines often leverage cloud computing, distributed processing frameworks, and sophisticated orchestration tools to achieve scalability and performance that would have been impossible with legacy systems.

This evolution reflects the growing strategic importance of data in driving business decisions, powering analytics, and enabling AI applications.

ETL pipeline stages

While modern implementations may introduce variations and optimizations, the fundamental Extract-Transform-Load workflow remains the cornerstone of effective data integration.

Let's explore each stage in detail to understand how raw data becomes analytics-ready information.

Extract

The extraction phase pulls data from multiple disparate sources while minimizing disruption to the original systems. Modern ETL pipelines can connect to virtually any data source, including relational databases (SQL Server, Oracle, MySQL), APIs, SaaS platforms like Salesforce and HubSpot, IoT devices sending telemetry data, and cloud storage solutions.

Today's extraction processes are designed to handle not just structured data in neat tables, but also semi-structured formats like JSON and XML, and even unstructured data like emails, documents, and social media content.

This versatility is crucial as organizations increasingly need to analyze diverse data types to gain comprehensive business insights. Employing advanced data preparation techniques at the extraction phase enhances the quality and usability of the data.

Extraction mechanisms must navigate various connection protocols, authentication methods, and rate limits. Change Data Capture (CDC) techniques allow ETL systems to identify and extract only new or modified records since the last extraction, significantly reducing processing overhead and source system impact.
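One common way to approximate CDC is watermark-based incremental extraction: remember the latest `updated_at` value you have seen and pull only rows modified after it. The sketch below is a minimal illustration that uses Python's built-in `sqlite3` as a stand-in source; the `customers` table and the `extract_incremental` helper are hypothetical. Log-based CDC, which reads the database's transaction log, also captures deletes, which a watermark query like this one cannot see.

```python
import sqlite3

def extract_incremental(conn, last_watermark):
    """Return rows changed after last_watermark and the watermark for the next run."""
    rows = conn.execute(
        "SELECT id, name, updated_at FROM customers "
        "WHERE updated_at > ? ORDER BY updated_at",
        (last_watermark,),
    ).fetchall()
    # Advance to the newest timestamp seen; keep the old watermark if nothing changed.
    new_watermark = rows[-1][2] if rows else last_watermark
    return rows, new_watermark

# Hypothetical source table. ISO-8601 strings in one format compare correctly as text.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, updated_at TEXT)")
conn.executemany("INSERT INTO customers VALUES (?, ?, ?)", [
    (1, "Ada", "2025-05-01T08:00:00"),
    (2, "Grace", "2025-05-02T09:30:00"),
    (3, "Linus", "2025-05-03T10:15:00"),
])

changed, watermark = extract_incremental(conn, "2025-05-01T23:59:59")
print([row[0] for row in changed])  # [2, 3]
print(watermark)                    # 2025-05-03T10:15:00
```

Persisting the returned watermark (for example in a small state table) after each successful run is what makes the next extraction incremental.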

Modern extraction also employs parallelization to handle high-volume sources efficiently while maintaining precise logs for auditing and troubleshooting.
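As a rough sketch of parallel extraction with an audit trail, the example below fans partition reads out to a thread pool and logs a row count per partition. `fetch_partition` is a hypothetical placeholder for a real database query or API call, and threads are assumed because extraction is usually I/O-bound.

```python
import logging
from concurrent.futures import ThreadPoolExecutor

logging.basicConfig(level=logging.INFO, format="%(asctime)s %(levelname)s %(message)s")
log = logging.getLogger("extract")

def fetch_partition(partition_id):
    """Hypothetical stand-in for reading one partition (e.g. one day of data)."""
    rows = [{"partition": partition_id, "value": i} for i in range(3)]
    log.info("extracted partition=%s rows=%d", partition_id, len(rows))  # audit log entry
    return rows

def extract_parallel(partition_ids, max_workers=4):
    # Threads suit I/O-bound work such as waiting on databases or APIs.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        results = list(pool.map(fetch_partition, partition_ids))
    # pool.map returns results in input order, so the combined output is deterministic.
    return [row for part in results for row in part]

rows = extract_parallel(["2025-05-01", "2025-05-02", "2025-05-03"])
```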

Transform

The transformation stage is typically the most complex and computationally intensive part of an ETL pipeline. Raw data undergoes cleaning operations to handle missing values, remove duplicates, standardize formats, and correct errors. Data validation rules ensure that information meets quality thresholds before proceeding.
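To make the cleaning and validation steps concrete, here is a minimal plain-Python sketch, assuming records arrive as dictionaries with hypothetical `email` and `country` fields. It standardizes formats, fills a missing value with a default, rejects rows that fail a simple validation rule, and drops duplicates by key. Production pipelines would typically express the same logic in SQL or a DataFrame API.

```python
def clean(records):
    """Return (cleaned, rejected) lists from raw customer records."""
    seen = set()
    cleaned, rejected = [], []
    for r in records:
        email = (r.get("email") or "").strip().lower()            # standardize format
        country = (r.get("country") or "UNKNOWN").strip().upper()  # fill missing value
        if not email or "@" not in email:                          # validation rule
            rejected.append(r)
            continue
        if email in seen:                                          # drop duplicates by key
            continue
        seen.add(email)
        cleaned.append({"email": email, "country": country})
    return cleaned, rejected

raw = [
    {"email": " Ada@Example.com ", "country": "us"},
    {"email": "ada@example.com", "country": "US"},    # duplicate after normalization
    {"email": "grace@example.com", "country": None},  # missing country
    {"email": "not-an-email", "country": "de"},       # fails validation
]
good, bad = clean(raw)
```

Keeping rejected rows in a separate output, rather than silently discarding them, makes quality problems visible to whoever owns the source.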

Transformations often involve sophisticated operations like joining data from multiple sources (e.g., combining customer records with transaction history), aggregating values (calculating sums, averages, or percentiles), deriving new columns through calculations or business logic, and applying complex rules that reflect organizational policies or regulatory requirements.
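The join, aggregation, and derived-column operations described above can be sketched in a few lines of Python. The `customers` and `orders` data and the 150.0 high-value threshold are hypothetical; the point is the shape of the logic: aggregate transactions per customer, left-join the totals onto customer records, then derive new columns from business rules.

```python
from collections import defaultdict

customers = [
    {"customer_id": 1, "name": "Ada", "segment": "enterprise"},
    {"customer_id": 2, "name": "Grace", "segment": "smb"},
    {"customer_id": 3, "name": "Linus", "segment": "smb"},  # no orders yet
]
orders = [
    {"order_id": 10, "customer_id": 1, "amount": 120.0},
    {"order_id": 11, "customer_id": 1, "amount": 80.0},
    {"order_id": 12, "customer_id": 2, "amount": 40.0},
]

def customer_summary(customers, orders):
    totals = defaultdict(lambda: {"order_count": 0, "revenue": 0.0})
    for o in orders:  # aggregate transactions per customer
        t = totals[o["customer_id"]]
        t["order_count"] += 1
        t["revenue"] += o["amount"]
    summary = []
    for c in customers:  # left join: customers without orders are kept
        t = totals.get(c["customer_id"], {"order_count": 0, "revenue": 0.0})
        avg = t["revenue"] / t["order_count"] if t["order_count"] else 0.0
        summary.append({**c, **t,
                        "avg_order_value": avg,                # derived column
                        "high_value": t["revenue"] >= 150.0})  # hypothetical business rule
    return summary

summary = customer_summary(customers, orders)
```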

Modern ETL systems increasingly use AI to assist with transformation. Machine learning algorithms can auto-detect schema anomalies, suggest data mappings, flag data quality issues, and even anticipate which transformations are needed based on patterns in the data.
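Schema-anomaly detection does not have to start with machine learning. The sketch below is a simple rule-based drift check, not an ML model, of the kind such tools build on: it compares the field names and Python types observed in a batch against an expected schema. The schema format and field names are assumptions for illustration.

```python
def detect_schema_drift(expected, batch):
    """Compare observed field names/types in a batch against an expected schema."""
    observed = {}
    for row in batch:
        for field, value in row.items():
            observed.setdefault(field, set()).add(type(value).__name__)
    issues = []
    for field, expected_type in expected.items():
        if field not in observed:
            issues.append(f"missing field: {field}")
        elif observed[field] - {expected_type, "NoneType"}:  # nulls are tolerated
            issues.append(f"type change in {field}: {sorted(observed[field])}")
    for field in sorted(observed.keys() - expected.keys()):
        issues.append(f"unexpected field: {field}")
    return issues

expected = {"id": "int", "amount": "float", "currency": "str"}
batch = [
    {"id": 1, "amount": 9.99, "currency": "USD"},
    {"id": 2, "amount": "12.50", "currency": "EUR", "channel": "web"},  # drifted row
]
issues = detect_schema_drift(expected, batch)
```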

In healthcare settings, for example, AI-driven ETL can standardize diverse medical coding systems or normalize patient records from different providers.

Load

The final stage delivers the processed data to a target system where it becomes immediately available for analysis and business intelligence. These destinations include data warehouses, data lakes, or specialized analytics platforms for specific use cases.

Loading approaches vary based on business requirements. Traditional batch loading processes data in scheduled intervals (nightly, weekly), while micro-batch approaches process smaller chunks more frequently.
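Micro-batching can be as simple as grouping a record stream into fixed-size chunks and loading each chunk as one write. This sketch assumes count-based batches and uses a hypothetical `load_batch` that appends to a list in place of a warehouse bulk insert; many systems trigger micro-batches on a time interval instead. On Python 3.12+, `itertools.batched` provides the chunking step directly.

```python
from itertools import islice

def micro_batches(records, batch_size):
    """Yield successive lists of at most batch_size records."""
    it = iter(records)
    while batch := list(islice(it, batch_size)):
        yield batch

def load_batch(batch, target):
    target.extend(batch)  # hypothetical stand-in for one bulk insert

target, batch_sizes = [], []
for batch in micro_batches(range(10), batch_size=4):
    load_batch(batch, target)
    batch_sizes.append(len(batch))
```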

Real-time streaming loads enable near-instantaneous data availability for time-sensitive applications like fraud detection or real-time dashboards.
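Streaming logic differs from batch logic in that each event is handled as it arrives, with any state kept in memory between events. The sketch below illustrates this with a hypothetical fraud rule (flag a card used more than `max_count` times within a sliding `window_seconds` window). A plain Python iterable stands in for a stream such as a Kafka topic, and the thresholds are placeholders rather than recommended values.

```python
from collections import defaultdict, deque

def flag_rapid_transactions(events, window_seconds=60, max_count=3):
    """Process events one at a time and yield an alert when a card exceeds max_count."""
    recent = defaultdict(deque)  # card_id -> timestamps inside the current window
    for event in events:
        ts, card = event["ts"], event["card_id"]
        window = recent[card]
        window.append(ts)
        while window and window[0] <= ts - window_seconds:
            window.popleft()  # evict timestamps that fell out of the window
        if len(window) > max_count:
            yield {"card_id": card, "ts": ts, "count": len(window)}

events = [
    {"ts": 0, "card_id": "A"}, {"ts": 5, "card_id": "B"}, {"ts": 10, "card_id": "A"},
    {"ts": 20, "card_id": "A"}, {"ts": 30, "card_id": "A"}, {"ts": 100, "card_id": "A"},
]
alerts = list(flag_rapid_transactions(events))
```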

Modern loading mechanisms support scalable, parallel operations that distribute the workload across multiple compute resources to handle massive datasets efficiently.

They also maintain detailed metadata about each load, including timestamps, record counts, and success metrics, which are crucial for governance and troubleshooting.
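Here is a minimal sketch of capturing load metadata alongside the load itself. A Python list stands in for the target table, and the field names in the returned audit record are assumptions; in practice the record would be written to a metadata or audit table.

```python
import time
from datetime import datetime, timezone

def load_with_metadata(rows, target, table_name):
    """Append rows to target and return an audit record describing the load."""
    started = datetime.now(timezone.utc)
    t0 = time.perf_counter()
    status, error, loaded = "success", None, 0
    try:
        for row in rows:
            target.append(row)  # hypothetical stand-in for a warehouse write
            loaded += 1
    except Exception as exc:    # capture the failure in the audit record
        status, error = "failed", str(exc)
    return {
        "table": table_name,
        "started_at": started.isoformat(),
        "duration_s": round(time.perf_counter() - t0, 3),
        "records_loaded": loaded,
        "status": status,
        "error": error,
    }

target = []
meta = load_with_metadata([{"id": 1}, {"id": 2}], target, "orders")
```

This sketch returns the failure as data so the audit record is never lost; a production loader would usually persist the record and then re-raise so the orchestrator marks the run as failed.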

Sophisticated systems can perform "upserts" that update existing records and insert new ones in a single operation, maintaining data consistency while minimizing processing overhead.
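An upsert can be demonstrated with SQLite's `INSERT ... ON CONFLICT ... DO UPDATE` syntax (available since SQLite 3.24), used here as a lightweight stand-in for a warehouse; many warehouses expose the same pattern through `MERGE INTO`. The table and rows are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT, tier TEXT)")
conn.execute("INSERT INTO customers VALUES (1, 'ada@example.com', 'basic')")

incoming = [
    (1, "ada@example.com", "gold"),     # existing id -> update
    (2, "grace@example.com", "basic"),  # new id -> insert
]
with conn:  # commits the transaction if no exception is raised
    conn.executemany(
        """
        INSERT INTO customers (id, email, tier) VALUES (?, ?, ?)
        ON CONFLICT(id) DO UPDATE SET email = excluded.email, tier = excluded.tier
        """,
        incoming,
    )

rows = conn.execute("SELECT * FROM customers ORDER BY id").fetchall()
print(rows)  # [(1, 'ada@example.com', 'gold'), (2, 'grace@example.com', 'basic')]
```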

Types of ETL Pipelines

ETL pipelines come in different formats designed to serve varying business requirements. The timing and frequency of data processing are critical factors that distinguish these pipelines from one another.

- Batch processing - Batch processing collects data over time and processes it at scheduled intervals, typically during off-hours when system load is minimal. Modern implementations leverage distributed computing frameworks like Apache Spark to complete in minutes jobs that once took days, maintaining cost-efficiency while significantly improving performance.

- Real-time processing - Real-time ETL processes data as soon as it is created, maintaining continuous pipelines that transform and deliver insights within seconds or milliseconds. Technologies like Apache Kafka and Apache Flink provide the streaming backbone, supporting sophisticated operations while handling massive throughput with minimal latency.

Choosing the right type of ETL pipeline depends on the specific business needs, such as how fresh the data must be, on the nature of the data being processed, and on how you plan to assess data quality and pipeline performance.

Why ETL pipelines matter

ETL pipelines are the backbone of strategic data initiatives that drive competitive advantage. By systematically extracting, transforming, and loading data from disparate sources, these pipelines enable organizations to convert raw information into actionable intelligence that informs critical business decisions.

As data becomes increasingly central to business strategy, properly designed ETL processes have evolved from back-office IT concerns into mission-critical assets that directly impact an organization's ability to innovate, respond to market changes, and serve customers effectively.

Data integration and centralization

ETL pipelines are critical connectors that bring together data from across the enterprise into a unified, consistent format. This centralization breaks down organizational data silos that traditionally keep valuable information trapped within departmental boundaries.

By extracting data from disparate systems – from legacy databases to modern SaaS applications – and integrating it into a coherent whole, ETL provides decision-makers with a comprehensive view of business operations.

This integration is powerful because it standardizes inconsistent data formats, resolves conflicts between different sources, and creates a single source of truth that stakeholders can trust. That same consistency underpins data integrity in downstream AI systems.

For example, customer information scattered across CRM systems, order management platforms, and support ticketing tools can be unified to create complete customer profiles that drive more effective engagement strategies.
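As a sketch of how such unification can resolve conflicts between sources, the example below merges records from three hypothetical sources on a normalized email key and applies a fixed source precedence (here, CRM values win). Real entity resolution often needs fuzzier matching; the precedence order and field names are assumptions.

```python
SOURCE_PRIORITY = ["crm", "orders", "support"]  # hypothetical: highest priority first

def unify_customers(records_by_source):
    """Merge per-source customer records into one profile per normalized email."""
    profiles = {}
    # Apply the lowest-priority source first so higher-priority values overwrite it.
    for source in reversed(SOURCE_PRIORITY):
        for rec in records_by_source.get(source, []):
            key = rec["email"].strip().lower()  # shared matching key
            profile = profiles.setdefault(key, {"email": key, "sources": set()})
            profile["sources"].add(source)
            for field, value in rec.items():
                if field != "email" and value is not None:  # nulls never overwrite
                    profile[field] = value
    return profiles

profiles = unify_customers({
    "crm": [{"email": "Ada@Example.com", "name": "Ada Lovelace", "phone": None}],
    "orders": [{"email": "ada@example.com", "name": "A. Lovelace", "last_order": "2025-04-30"}],
    "support": [{"email": "ada@example.com", "open_tickets": 2, "phone": "+1-555-0100"}],
})
```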

In enterprise environments, ETL processes typically manage hundreds of tables and process millions of rows of data that change daily. This centralized approach enables organizations to support thousands of users with production dashboards and reports.

By bringing together data from disparate sources, companies create unified views of business performance that support better decision-making from operational teams to executive leadership.

Without proper ETL pipelines, organizations struggle with fragmented, inconsistent data that leads to contradictory reports, duplicated efforts, and missed opportunities.

Efficiency and scalability

ETL pipelines deliver significant efficiency gains by automating the complex processes required to make data usable for business purposes. Instead of manual data manipulation, which is time-consuming, error-prone, and difficult to reproduce, ETL solutions provide repeatable, consistent data processing that scales as business needs evolve.

Applying continuous integration (CI) practices, such as automated tests that run on every change to pipeline code, can further increase automation and reduce errors in ETL processes.
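A small example of a CI practice is a unit test for a single transformation. The `normalize_country` function is hypothetical; the test follows pytest conventions (a function named `test_*` containing plain `assert` statements), so a CI job that runs `pytest` on every commit would catch a regression before it reaches production.

```python
def normalize_country(code):
    """Transformation under test: trim and upper-case a country code, defaulting blanks."""
    if code is None or not code.strip():
        return "UNKNOWN"
    return code.strip().upper()

def test_normalize_country():
    # pytest collects functions named test_*; CI would run `pytest` on every commit.
    assert normalize_country(" us ") == "US"
    assert normalize_country("De") == "DE"
    assert normalize_country(None) == "UNKNOWN"
    assert normalize_country("   ") == "UNKNOWN"
```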

As data volumes grow exponentially, modern ETL approaches built on cloud infrastructure can scale elastically to handle increasing workloads. This elasticity allows organizations to process terabytes or even petabytes of data without the performance degradation that would occur in traditional environments.

When seasonal peaks or special projects require additional processing power, cloud-based ETL solutions can automatically provision more resources and scale back when demand decreases, optimizing performance and cost.

Monitoring key metrics, such as run duration, record counts, and failure rates, is essential for keeping ETL systems efficient and scalable.
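One simple monitoring check compares each run's record count to a recent baseline and raises an alert when it deviates too far. The sketch below assumes a non-empty history of positive counts, and its 50% tolerance is an arbitrary placeholder to tune per pipeline.

```python
from statistics import mean

def check_row_count(history, today, tolerance=0.5):
    """Alert when today's count deviates from the recent average by more than `tolerance`."""
    baseline = mean(history)  # assumes a non-empty history of positive counts
    deviation = abs(today - baseline) / baseline
    return {"baseline": baseline, "deviation": round(deviation, 3), "alert": deviation > tolerance}

recent_counts = [1000, 1100, 900]  # hypothetical counts from the last three runs
result = check_row_count(recent_counts, 400)
```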

Organizations that modernize their ETL processes often see dramatic improvements in operational efficiency. For instance, companies that move from legacy ETL tools to cloud-native solutions typically report simpler workflows, with complex procedures reduced from dozens of steps to fewer than ten.

This streamlining not only accelerates development but also reduces the potential for errors and bottlenecks.

The efficiency gains from modern ETL solutions directly translate to business agility. With faster data processing cycles, organizations can increase the frequency of key reports from daily to multiple times per day, providing more timely insights.

For data professionals, these efficiency improvements mean less time spent on routine data preparation and more time available for high-value analysis that drives business outcomes.

Enabling advanced analytics

ETL pipelines form the critical foundation for advanced analytics, machine learning, and artificial intelligence initiatives by ensuring that data is clean, consistent, and properly structured for sophisticated modeling.

While raw data is rarely suitable for immediate analysis, properly transformed data enables faster, more accurate insights that drive business value.

Clean, transformed data is essential for building reliable predictive models. Machine learning algorithms can only produce trustworthy results when trained on high-quality data that has been through rigorous ETL processes to handle outliers, normalize formats, and fill gaps.

Even the most sophisticated AI systems will generate misleading outputs when fed inconsistent or error-filled data, making robust ETL pipelines a prerequisite for successful advanced analytics. Ongoing monitoring is also vital to keep AI systems reliable once they depend on these pipelines.

Effective evaluation metrics are equally necessary to assess how the models fed by these ETL processes actually perform.

Modern ETL pipelines increasingly incorporate AI-ready features directly into data workflows. This includes creating feature stores that maintain consistent model inputs, generating vector embeddings for text analysis, and structuring data in formats optimized for machine learning frameworks.

By embedding these capabilities within ETL processes, organizations can significantly accelerate their path from raw data to AI-powered insights.

Organizations with well-designed ETL pipelines find it substantially easier to integrate language models and other AI capabilities with their existing data.

When data is already centralized, cleaned, and properly structured in modern cloud platforms, the development of AI/ML applications becomes much faster. This integration enables powerful use cases like natural language querying of business data, automatic document classification, and sentiment analysis of customer interactions.

By establishing robust data transformation pipelines, companies build the necessary infrastructure to move beyond basic reporting into predictive and prescriptive analytics that drive innovation and business transformation.

ETL pipelines vs. data pipelines

Today's data ecosystem encompasses various methodologies for transferring, transforming, and storing information, each with distinct advantages for specific use cases. ETL remains valuable for many scenarios, but alternatives like ELT (Extract, Load, Transform) and ELTL (Extract, Load, Transform, Load) have gained prominence as organizations face new challenges with diverse data types and advanced analytics requirements.

Understanding the differences between these approaches is crucial for designing data architectures that integrate data effectively and support both traditional business intelligence and emerging AI applications.

What are data pipelines?

Data pipelines are automated processes that move data from source to destination systems, orchestrating the flow of information throughout an organization's technical infrastructure.

These broader frameworks encompass ETL as one specific pattern but include various approaches designed for different processing requirements:

- ETL (Extract, Transform, Load) extracts data from sources, transforms it during transit, and loads the processed results into target systems.

- ELT (Extract, Load, Transform) moves raw data directly into the target system first, then leverages its processing power for transformation.

- ELTL (Extract, Load, Transform, Load) loads data into a staging area, performs transformations there, then loads the processed results into specialized storage.

- Streaming pipelines process data continuously in real-time without batch accumulation, enabling immediate analysis and action.

- Change Data Capture (CDC) pipelines identify and propagate only data that has changed since the last extraction, minimizing processing overhead.

Differences between ETL and data pipelines

How to build a modern ETL pipeline

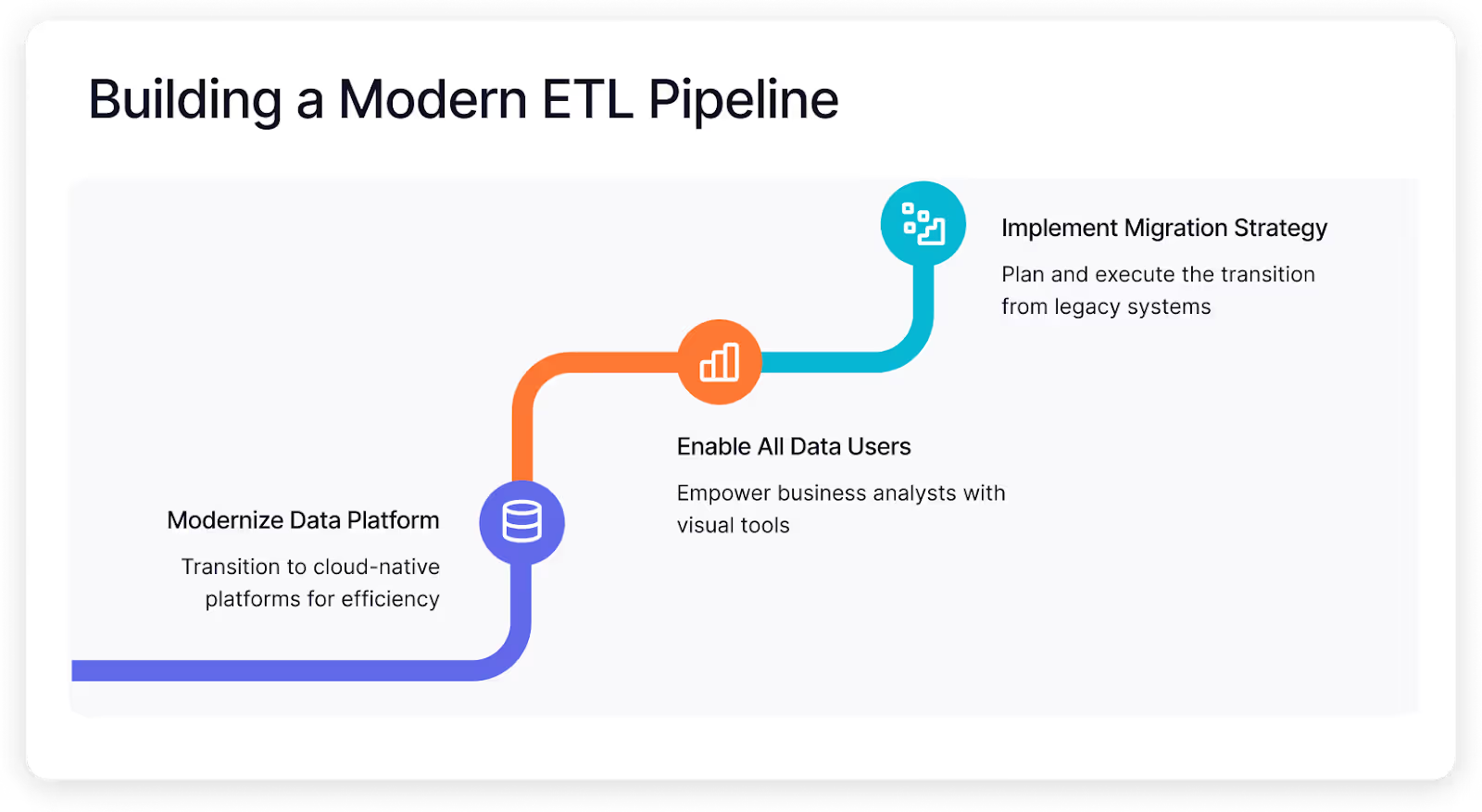

Building effective ETL pipelines requires three core strategies: modernizing your data platform, enabling all data users, and implementing a structured migration approach. Each element addresses specific challenges in data transformation while creating a foundation for both traditional analytics and AI applications.

This practical guide outlines actionable steps for organizations seeking to overcome data bottlenecks and maximize the value of their information assets.

Step one: Modernize your data platform

Moving from legacy ETL to cloud-native platforms is step one in modernization. Traditional ETL creates bottlenecks: data gets stuck on intermediate servers with limited processing power. Cloud platforms like Databricks remove this bottleneck by running transformations on scalable, distributed compute.

The key shift? Moving from ETL to ELT (Extract, Load, Transform). Load your raw data first, then transform it using the platform's massive computing power. This change can deliver substantial performance improvements.
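The difference is easiest to see in a tiny ELT sketch: raw records are loaded as-is into a staging table, and the transformation then runs as SQL inside the target. SQLite stands in for a cloud warehouse here and the table names are hypothetical; on a real platform the same `CREATE TABLE ... AS SELECT` pattern runs on the platform's distributed compute.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # stand-in for a cloud warehouse

# Extract + Load: land raw records unchanged (untrimmed, untyped text).
conn.execute("CREATE TABLE raw_orders (order_id TEXT, customer TEXT, amount TEXT)")
conn.executemany("INSERT INTO raw_orders VALUES (?, ?, ?)", [
    ("1", " ada ", "120.50"),
    ("2", "ADA", "79.50"),
    ("3", "grace", "40"),
])

# Transform: run SQL inside the target instead of on an intermediate ETL server.
conn.execute("""
    CREATE TABLE customer_revenue AS
    SELECT LOWER(TRIM(customer)) AS customer,
           COUNT(*) AS orders,
           SUM(CAST(amount AS REAL)) AS revenue
    FROM raw_orders
    GROUP BY LOWER(TRIM(customer))
""")
rows = conn.execute("SELECT * FROM customer_revenue ORDER BY customer").fetchall()
print(rows)  # [('ada', 2, 200.0), ('grace', 1, 40.0)]
```

Because the raw table is kept, the transformation can be rewritten and re-run later without extracting from the source again.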

Cloud platforms handle all data types efficiently—structured database records, JSON files, documents, images, and audio. This versatility supports both traditional analytics and modern AI applications in one system.

Cost structures improve too. Instead of expensive servers sitting idle between batch jobs, cloud platforms let you pay only for what you use. Need more processing power for month-end reporting? Scale up. Quiet period? Scale down.

Governance gets better, not worse. Modern platforms provide unified security, comprehensive auditing, and centralized metadata management. You gain control while increasing flexibility.

Step two: Enable all data users

Breaking through the data transformation bottleneck means getting more people involved in the process. Modern ETL tools use visual interfaces that make data preparation accessible to business analysts, not just specialized engineers.

Drag-and-drop pipeline builders let users see data flowing through transformations without writing code. This visual approach makes complex operations like joins, aggregations, and filtering intuitive for anyone who understands the data.

AI assistance dramatically accelerates development. Data transformation copilots can now generate entire pipelines from simple text descriptions, suggest optimal transformations, and even fix errors automatically. They're like having an expert looking over your shoulder, making recommendations in real time.

This democratization reduces the backlog that plagues data engineering teams. When business users can create their own data pipelines, they don't wait weeks for their requests to reach the top of the priority list.

The best tools maintain a "single source of truth" by generating high-quality code that works directly with your cloud platform. Everything happens on the same infrastructure, following the same standards, with full visibility into data lineage.

Visual interfaces also make data lineage understandable for everyone. Users can see exactly where data comes from and how it's transformed at each step, building trust in the final results.

For technical users who prefer coding, modern platforms still provide code-based interfaces that generate the same underlying assets. This flexibility ensures business and technical users can work together productively on the same platform.

Step three: Implement a migration strategy

Moving from legacy ETL isn't about flipping a switch. You need a plan.

First, take inventory:

- Which pipelines matter most?

- Which ones run daily?

Start with these high-value workflows.

Get your data connections ready. Make sure your cloud platform can access all your source systems. This prep work saves headaches later.

Use automation to convert your old code. Modern tools can transform Informatica, Alteryx, or custom SQL into cloud-ready code automatically. This cuts conversion work significantly.

Once converted, make your pipelines cloud-smart. Break up monolithic processes, add parallelization, and use native cloud features that make everything run faster.
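Breaking up a monolithic process usually means modeling it as small tasks with explicit dependencies. The sketch below uses Python's standard-library `graphlib.TopologicalSorter` (Python 3.9+) on a hypothetical task graph to compute which tasks could run in parallel at each stage; a real orchestrator would execute each task before marking it done.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline split into small tasks; each maps to the tasks it depends on.
dag = {
    "extract_orders": set(),
    "extract_customers": set(),
    "clean_orders": {"extract_orders"},
    "clean_customers": {"extract_customers"},
    "join": {"clean_orders", "clean_customers"},
    "publish": {"join"},
}

def plan_waves(dag):
    """Group tasks into waves; tasks within one wave have no dependencies on each other."""
    sorter = TopologicalSorter(dag)
    sorter.prepare()
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # these could run in parallel
        waves.append(ready)
        for task in ready:
            sorter.done(task)  # a real runner would execute the task first
    return waves

waves = plan_waves(dag)
```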

Put everything in Git. Version control isn't optional anymore. It tracks changes, enables collaboration, and powers your deployment pipeline.

Test thoroughly before switching. Run old and new systems side by side. Compare results. Set up automated tests to catch issues early.
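Comparing results during a parallel run can start with a keyed diff of the legacy and new outputs. This sketch assumes both outputs fit in memory as lists of dictionaries sharing an `id` key (a hypothetical schema). For large tables you would run the equivalent comparison as SQL, and for floating-point columns you would compare within a tolerance rather than for exact equality.

```python
def compare_outputs(legacy_rows, new_rows, key="id"):
    """Report keys missing from, added to, or changed in the new pipeline's output."""
    legacy = {r[key]: r for r in legacy_rows}
    new = {r[key]: r for r in new_rows}
    return {
        "missing_in_new": sorted(legacy.keys() - new.keys()),
        "extra_in_new": sorted(new.keys() - legacy.keys()),
        "mismatched": sorted(k for k in legacy.keys() & new.keys() if legacy[k] != new[k]),
    }

legacy = [{"id": 1, "total": 100}, {"id": 2, "total": 50}, {"id": 3, "total": 75}]
new = [{"id": 1, "total": 100}, {"id": 2, "total": 55}, {"id": 4, "total": 10}]
report = compare_outputs(legacy, new)
```

An empty report on every run over an agreed period is a reasonable signal that the cutoff can proceed.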

Pick a cutoff date and stick to it. Running two systems in parallel indefinitely creates confusion. Make a clean break when you're confident in the new system.

Train your team, but don't worry too much. Modern visual interfaces are intuitive, and most people adapt quickly.

Remember, this isn't just about copying old workflows. It's your chance to build something better. Add governance, improve performance, and create a system that scales with your needs.

Unlock ETL modernization with Prophecy

Modern ETL pipelines are essential for unleashing your data's full potential in the AI era, and Prophecy provides the ideal platform to build and manage these critical data workflows.

- Development and Automation: Prophecy's AI suggests transformations, generates quality code, and provides real-time error detection, streamlining pipeline creation while ensuring code quality.

- Collaboration and Governance: Built-in features for version control, lineage tracking, access controls, and policy enforcement support compliance while enabling seamless collaboration.

- Extensibility and Reusability: Data engineers create reusable templates and custom components that analysts leverage to standardize operations while maintaining flexibility for custom logic.

- Automated Testing and Observability: The platform automates testing, validation, and deployment while providing comprehensive monitoring and surfacing runtime errors for quick troubleshooting.

Curious how you can modernize and scale your ETL process? Learn how you can build data pipelines in Databricks in just 5 steps with Prophecy.

Ready to give Prophecy a try?

You can create a free account and get full access to all features for 21 days. No credit card needed. Want more of a guided experience? Request a demo and we’ll walk you through how Prophecy can empower your entire data team with low-code ETL today.
