A Guide to Understanding Structured and Unstructured Data for Data Strategies

Learn how to leverage both structured and unstructured data for competitive advantage. Discover practical integration approaches and technology solutions to effectively manage diverse data types and drive informed decisions.

This article was refreshed on 05/2025.

From tracking every customer transaction to the vast ocean of social media posts, user data, and multimedia files, the variety of data is vast. But with all this data comes a big question: how do we make sense of it all?

Structured and unstructured data represent two fundamental approaches to storing and managing data. Structured data gives us order and predictability—perfect when consistency is key. But unstructured data? That's where hidden insights lie in free-form text, images, videos, and more.

In this article, we explore the key differences, applications, and considerations to help you effectively leverage these data types and drive smarter decisions in your organization.

Defining structured and unstructured data

Both structured and unstructured data store information, sure, but they do it in fundamentally different ways, each tailored to specific tasks and challenges.

What is structured data?

Structured data is information organized in a highly defined manner within databases according to a pre-established model. Imagine it as the neatly labeled shelves in your favorite bookstore. Data sits in tables with rows and columns, following a fixed schema design that tells exactly what goes where. This setup ensures consistency and makes managing relationships between different bits of data simple.

Examples of structured data systems include relational databases like MySQL, PostgreSQL, and Oracle—the classics. They use SQL to manage and query data, letting you do complex things like joins and aggregations. These systems shine where data integrity and speedy access matter most—like transactional systems, reporting tools, and analytics.

The real power of the relational model is to make data both accessible and reliable. With their strict schemas and relationships, structured data systems lay down a solid foundation for operations where precision and consistency are non-negotiable.

Pros of structured data

Structured data offers significant benefits for organizations requiring reliable, high-performance data operations:

- Predictable organization and efficient querying

- Strong data integrity and relationship management

- Optimized for transactions and real-time analytics

- Mature ecosystem of tools and standardized interfaces

- Reliable performance for mission-critical operations

Cons of structured data

Despite its strengths, structured data presents several challenges that organizations must consider:

- Limited flexibility when handling diverse data types

- Schema changes can be complex and disruptive

- Vertical scaling constraints as data volumes grow

- Can be inefficient for storing certain complex data formats

- Often requires significant upfront modeling effort

What is unstructured data?

Unstructured data is information that doesn't conform to a predefined data model or organization method and typically includes text documents, images, videos, social media posts, and other varied formats. Without a predefined schema holding it back, this data type is flexible enough to handle all sorts of formats.

Examples include document stores like MongoDB, key-value stores, and object storage systems. They're built to handle diverse, complex data where old-school schemas just get in the way. So, when you're working with multimedia content or mining social media feeds, the flexibility of unstructured data is a game-changer.

But flexibility has its costs. Unstructured data might not offer the same querying prowess as structured data. Getting meaningful insights can demand advanced tools, especially as your data gets complex. Still, it opens up possibilities in areas like natural language processing, machine learning, and big data analytics.

Pros of unstructured data

Unstructured data provides numerous benefits for organizations dealing with diverse information types:

- Exceptional flexibility to handle diverse data types

- Simple data ingestion without schema constraints

- Natural fit for content-rich applications

- Scales horizontally to manage massive data volumes

- Enables advanced analytics like text mining and sentiment analysis

Cons of unstructured data

Working with unstructured data introduces challenges that may require specialized approaches:

- More complex querying and data retrieval

- Requires specialized tools for effective analysis

- May need additional processing for meaningful insights

- Governance and consistency can be difficult to maintain

- Storage requirements can be substantial

What is semi-structured data?

Semi-structured data is information that contains some organizational properties but doesn't conform to the rigid structure of a relational database. This data type contains tags or markers to separate semantic elements and enforce hierarchies of records and fields within the data.

Common examples include XML, JSON, and CSV files. These formats have a flexible structure that allows for data variations while maintaining some level of organization. For instance, a JSON file storing customer information might have standard fields like name and email. Still, it can easily accommodate additional fields for some customers without changing the entire dataset.

Semi-structured data is particularly valuable in scenarios where data needs some organization for efficient processing but also requires flexibility to handle diverse information. It's widely used in web services, configuration files, and as an intermediate format between fully structured and unstructured systems.

Structured vs unstructured data: five key differences

Let’s see a quick comparison that outlines key distinctions across various dimensions:

Now, let's break down these differences and see how they impact your data storage.

1. Schema, structure, and organization

In structured data, the schema rules the roost. Before any data comes in, you lay out exactly how it's organized—the tables, fields, data types, relationships—the whole nine yards. This planning ahead makes querying efficient and keeps your data clean. Picture customer information stored in a table with specific fields for names, addresses, and contact details.

Unstructured data turns this idea on its head. It goes for a schema-on-read approach, meaning you apply the structure when you read the data, not when you store it. This flexibility is key when you're handling data like emails, videos, or sensor readings that don't play nicely with tidy rows and columns.

Structured data often needs normalization to cut down on redundancy and keep things consistent, but that can make updates tricky as relationships get more tangled. Unstructured data avoids this hassle by letting data be, well, just as it is. That makes storing different types of information easier, though it can be more challenging to maintain consistency.

These differences shape how you model your data. Structured systems need careful planning up front—great for order, but not so flexible. Unstructured systems give you agility, letting you adapt on the go without major overhauls.

2. Query capabilities and data access

Structured data uses SQL to deliver powerful, precise queries. Want to grab sales data for a certain region and time? A nicely written SQL query will get you there fast. Because the data is organized, indexed, and consistent, these queries run efficiently and reliably.

Unstructured data needs a different approach. It might use document-based queries, key-value lookups, or full-text searches. This lets you query flexibly, which is great for varied data types, but it can also add complexity and hit performance.

Pulling specific insights from heaps of unstructured data often calls for specialized tools and advanced techniques, such as extracting data effectively for meaningful insights and ELT processes.

When it comes to reporting and analytics, structured data makes life easy. Its rigidity allows for real-time analytics and seamless integration with business intelligence tools. Unstructured data, despite its wealth of potential insights, might need extra processing to get the data ready for analysis.

3. Performance and scalability considerations

With structured data, performance is typically steady and predictable, all due to its defined schemas and indexing. It's built for transaction processing, making sure operations are rock-solid and follow ACID properties. That makes it perfect for situations where accuracy and reliability are a must, like financial transactions.

However, scaling structured data isn't always easy. Vertical scaling—beefing up your existing server—can get pricey and only takes you so far. Changing the schema significantly to handle growth needs careful planning and can throw a wrench into operations.

Unstructured data, though, is designed to scale. It supports horizontal scaling, spreading data across multiple servers. That makes it great for handling huge amounts of rapidly growing data, like logs from a bunch of IoT devices.

Read/write performance differs between them. Structured data gives you quick reads thanks to efficient indexing, but writes can slow down if you're updating complex relationships. Unstructured data can handle speedy writes and is tuned for taking in large data volumes, but reads might lag if the data isn't well-indexed.

Use cases and practical applications of structured and unstructured data

Organizations across industries leverage structured and unstructured data in different ways, depending on their specific needs and objectives. From powering mission-critical operations to enabling innovative analytics, these complementary approaches serve distinct purposes in the modern data ecosystem.

Structured data use cases

Structured data serves as the foundation for critical business systems where reliability and precision are paramount. In financial services, structured databases power everything from real-time transaction processing to regulatory reporting, allowing institutions to maintain perfect accuracy in account management.

Retailers rely on structured data for inventory management across multiple locations, tracking stock levels, product performance, and supply chain operations with precise accuracy. This structured approach ensures they can make data-driven decisions about purchasing, pricing, and promotions based on reliable information.

Similarly, healthcare providers build administrative systems on structured foundations, managing patient records, appointments, and billing through organized relational databases that support their data-driven healthcare initiatives.

Enterprise resource planning systems exemplify structured data's strengths, integrating functions across finance, HR, manufacturing, and supply chain through highly organized data models. These interconnected systems would be impossible without the referential integrity and relationship management that structured approaches provide.

Customer relationship management represents another domain where structured data excels, giving sales teams a comprehensive, organized view of customer interactions, preferences, and history to drive personalized engagement strategies.

Unstructured data use cases

Unstructured data unlocks insights in scenarios where information doesn't conform to predefined categories. Media and entertainment companies manage vast repositories of digital assets, including videos, images, and documents, leveraging unstructured approaches to handle diverse file formats and metadata.

This flexibility allows them to store, retrieve, and analyze content without forcing it into rigid structures that would lose important context or meaning.

Marketing teams increasingly depend on unstructured social media data to understand consumer sentiment and trends, analyzing millions of posts, comments, and interactions that couldn't be adequately captured in traditional tables.

In healthcare, unstructured data drives diagnostic innovation through imaging data, clinical notes, and research papers. Medical professionals leverage these diverse sources to improve patient outcomes that combine structured patient records with unstructured clinical information.

Manufacturing facilities also process massive streams of sensor data from IoT devices, using unstructured approaches to capture and analyze information that varies widely in format, frequency, and importance but contains valuable operational insights.

Practical integration approaches

Most organizations achieve the greatest success by implementing hybrid approaches that leverage both data types. Modern data lakes, warehouses, and lakehouses combine structured reliability with unstructured flexibility, creating environments where diverse data can coexist while maintaining appropriate governance.

This unified approach allows organizations to apply consistent analytics across historically siloed information sources, effectively overcoming data silos.

Multi-model databases have emerged to support various data representations within a single system, reducing the complexity of managing separate platforms for different data types. Meanwhile, specialized data integration methods bridge structured and unstructured systems, enabling smooth data movement between environments without losing context or meaning.

Furthermore, API-driven architectures and microservices create standardized interfaces for accessing diverse data sources, abstracting away the underlying complexity for business users who need insights rather than technical details.

The most forward-thinking organizations implement unified analytics platforms that provide consistent analysis capabilities across all data types, democratizing access to insights while maintaining appropriate controls.

These comprehensive approaches recognize that the structured/unstructured dichotomy should be invisible to business users, who simply need reliable information to drive decisions regardless of how it's stored.

Tools and technologies for unifying structured and unstructured data

Organizations need specialized technologies to effectively manage diverse data types. Modern platforms like Databricks offer sophisticated tools for handling both structured and unstructured data through a unified approach.

Databricks makes handling both structured and unstructured data easier, leveraging cloud data engineering for enhanced data handling. With a unified storage layer, Databricks lets you keep all your data in one spot, no matter the format. That wipes out the need for separate systems and cuts down on the headache of managing diverse data types.

Databricks' flexible processing engine works smoothly with multiple data formats, modernizing ETL by processing JSON, CSV, images, or streaming data efficiently without a lot of reconfiguring. Incorporating AI in ETL can further streamline data processing and unlock advanced analytics. Organizations can fully utilize these capabilities by mastering ETL on Databricks.

One standout feature is the simplified query interface. Databricks supports several programming languages—Python, SQL, and Scala—so different users can work in the language they're comfortable with. With schema inference, you can handle unstructured data more intuitively, as the platform helps figure out the structure you need when you read the data.

In addition, Databricks uses a lakehouse architecture, blending the best of data warehouses and data lakes. You get a warehouse's governance and performance along with a lake's scalability and flexibility.

The lakehouse architecture fundamentally changes how organizations approach their data strategies, explains Roberto Salcido, System Architect at Databricks. By eliminating the need to maintain separate systems for different data types, teams can focus on extracting insights rather than managing infrastructure. This setup overcomes earlier limits by offering ACID transactions, scalable metadata handling, and unified governance across all data types.

Enabling governed self-service analytics for all data types

While modern tools like Databricks provide powerful capabilities for unifying diverse data, organizations still struggle to provide widespread access while maintaining control. Business users need self-service tools to prepare and analyze all types of data, but traditional approaches force an impossible choice between restrictive access that creates bottlenecks and ungoverned usage that introduces security and quality risks.

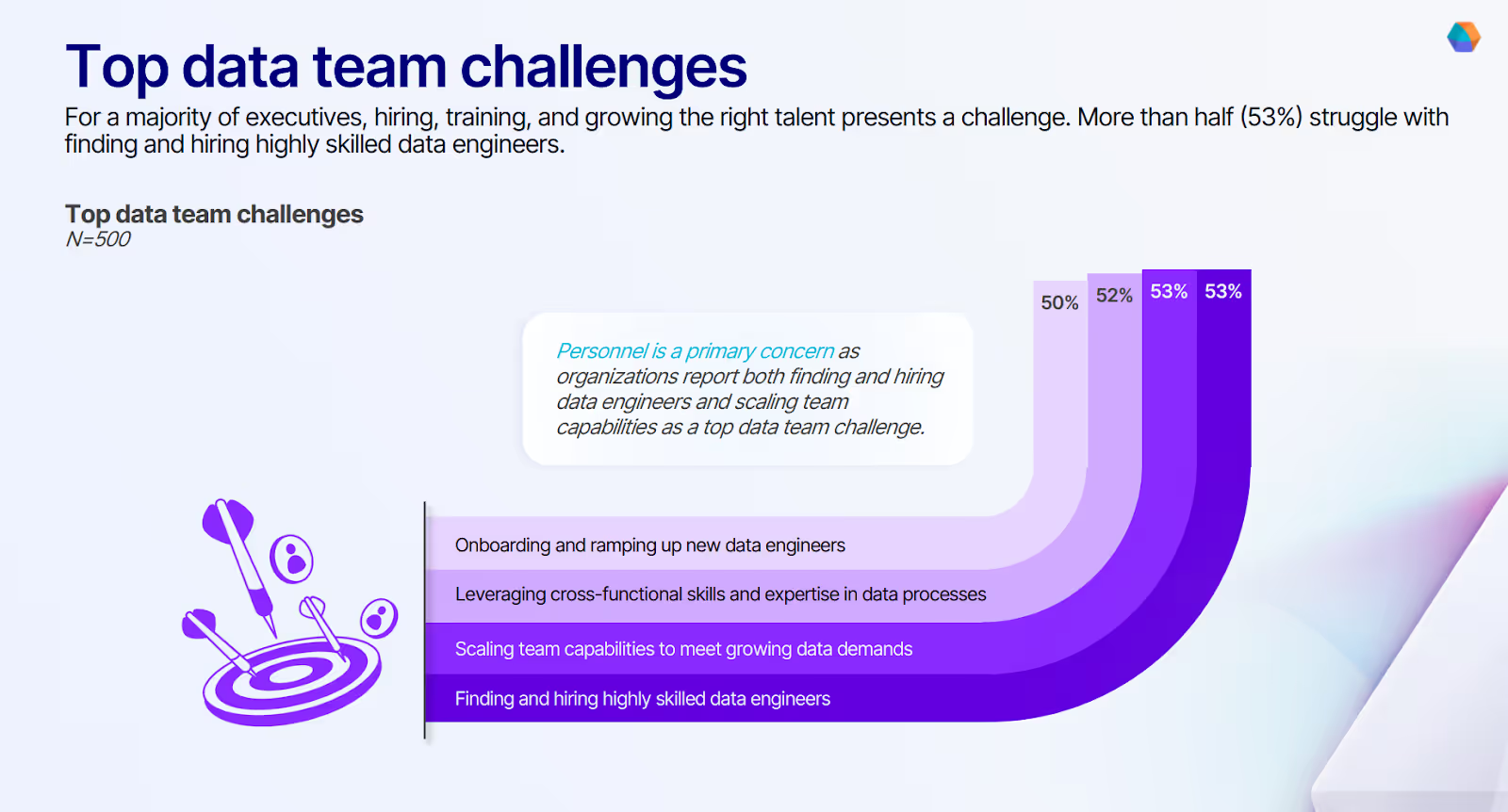

These challenges are intensified by widespread talent constraints. For a majority of executives, hiring, training, and growing the right talent presents a significant obstacle. Data leaders struggle with finding and hiring highly skilled data engineers, making self-service platforms increasingly critical for maximizing the productivity of existing teams.

Prophecy transforms this landscape by enabling governed self-service data preparation that works seamlessly with multiple data sources.

Here's how Prophecy's governed self-service approach delivers compelling advantages:

- Intuitive visual interface: Analysts build pipelines through a drag-and-drop experience that requires no coding expertise, making data preparation accessible regardless of technical background.

- AI-powered assistance: Built-in intelligence helps users understand and transform complex data, suggesting optimal approaches based on data characteristics and common patterns.

- Enterprise governance: Centralized controls ensure security and compliance without hampering productivity, maintaining full visibility for data platform teams.

- Databricks integration: Native connection to Databricks preserves all security policies through Unity Catalog, ensuring consistent governance across environments.

- End-to-end capabilities: Complete workflow support from data loading to transformation to reporting eliminates the need to switch between multiple tools and platforms.

To eliminate the difficult choice between restrictive data access that creates bottlenecks and ungoverned usage that introduces security risks, explore How to Build Data Pipelines on Databricks in 5 Easy Steps to democratize data preparation while maintaining enterprise governance.

Ready to give Prophecy a try?

You can create a free account and get full access to all features for 21 days. No credit card needed. Want more of a guided experience? Request a demo and we’ll walk you through how Prophecy can empower your entire data team with low-code ETL today.

Ready to see Prophecy in action?

Request a demo and we’ll walk you through how Prophecy’s AI-powered visual data pipelines and high-quality open source code empowers everyone to speed data transformation

Get started with the Low-code Data Transformation Platform

Meet with us at Gartner Data & Analytics Summit in Orlando March 11-13th. Schedule a live 1:1 demo at booth #600 with our team of low-code experts. Request a demo here.

Related content

A generative AI platform for private enterprise data

Introducing Prophecy Generative AI Platform and Data Copilot

Ready to start a free trial?

Lastest posts

The AI Data Prep & Analysis Opportunity

The Future of Data Is Agentic: Key Insights from Our CDO Magazine Webinar